FAMAD

From HP-SEE Wiki

General Information

- Application's name: Fractal Algorithms for MAss Distribution

- Application's acronym: FAMAD

- Virtual Research Community: Computational Physics

- Scientific contact: Ciprian Mihai Mitu, cmitu@spacescience.ro

- Technical contact: Ciprian Mihai Mitu, cmitu@spacescience.ro

- Developers: High Energy Astrophysics and Advanced Technologies, Institute of Space Sciences, Romania

- Web site: http://wiki.hp-see.eu/index.php/FAMAD

Short Description

FAMAD is a collection of algorithms for computing fractal parameters of mass distributions. Algorithms of this type are highly parallelizable and can analyse the large data sets customarily processed on clusters. The input data will be high-resolution images in FITS, the most widely used format in astrophysics. The application will use a box-counting algorithm running on GPU (with CUDA) to determine the fractal dimension. Data analysis and plotting will be done in the ROOT framework.
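
The box-counting estimate of the fractal dimension can be illustrated on the CPU. The sketch below is a plain Python reference, not FAMAD's CUDA code. It assumes the image is already available as a 2D list of pixel values (FITS reading is omitted), and the function names, the `threshold` parameter and the choice of box sizes are illustrative assumptions.

```python
import math

def box_count(image, box_size, threshold=0):
    """Count boxes of side `box_size` holding at least one pixel above `threshold`."""
    occupied = set()
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            if value > threshold:
                # Integer division maps a pixel to the box that contains it.
                occupied.add((r // box_size, c // box_size))
    return len(occupied)

def fractal_dimension(image, box_sizes, threshold=0):
    """Least-squares slope of log N(s) against log(1/s).

    Assumes at least two box sizes and at least one pixel above `threshold`.
    """
    xs = [math.log(1.0 / s) for s in box_sizes]
    ys = [math.log(box_count(image, s, threshold)) for s in box_sizes]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

# Two synthetic test images: a filled square and a single straight line.
filled = [[1] * 64 for _ in range(64)]
line = [[1 if r == 0 else 0 for _ in range(64)] for r in range(64)]

sizes = [1, 2, 4, 8, 16]
dim_filled = fractal_dimension(filled, sizes)  # close to 2 for a filled plane
dim_line = fractal_dimension(line, sizes)      # close to 1 for a line
```

Each pixel is tested independently of the others, which is the property that makes this kind of counting a natural fit for one-thread-per-pixel execution on a GPU.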

Problems Solved

Fractal algorithms are generally highly parallelizable. This application implements several of them on GPU for fractal analysis, including box counting, fractal lacunarity and correlation length. Owing to the architecture of graphics processors, the application will run thousands of threads simultaneously, so fractal analysis will be much faster than on CPU.
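
As an illustration of one of the quantities named above, the following Python sketch computes lacunarity with the gliding-box estimator, i.e. the ratio of the second moment of the box mass to the square of its mean. The source does not state which lacunarity estimator FAMAD uses, so the estimator, the function name and the 2D list input are assumptions made for this example only.

```python
def gliding_box_lacunarity(image, box_size):
    """Gliding-box lacunarity: <M^2> / <M>^2 over all box positions.

    `image` is a 2D list of non-negative pixel masses; the box is moved
    one pixel at a time over every position where it fits entirely.
    Assumes the image is not entirely empty (mean mass > 0).
    """
    rows, cols = len(image), len(image[0])
    masses = []
    for r in range(rows - box_size + 1):
        for c in range(cols - box_size + 1):
            # Total mass inside the box whose top-left corner is (r, c).
            mass = sum(
                image[r + dr][c + dc]
                for dr in range(box_size)
                for dc in range(box_size)
            )
            masses.append(mass)
    mean = sum(masses) / len(masses)
    mean_sq = sum(m * m for m in masses) / len(masses)
    return mean_sq / (mean * mean)

# A uniform image has no gaps; a single bright pixel is maximally clumped.
uniform = [[1] * 8 for _ in range(8)]
single = [[0] * 4 for _ in range(4)]
single[1][2] = 1

lac_uniform = gliding_box_lacunarity(uniform, 2)
lac_single = gliding_box_lacunarity(single, 1)
```

Every box position is evaluated independently, so the two nested position loops are the part that could be distributed over GPU threads.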

Scientific and Social Impact

The prevailing scientific picture of the formation and evolution of galaxies is that they formed by gravitational collapse of matter. The study of the fractal mass distribution of spiral galaxies will shed new light on the formation and evolution of galaxies. The application will provide new data on spiral arms and their fractal character. We hope that, at the end of this study, the general scientific view of the formation and evolution of spiral galaxies will change radically.

Collaborations

- Computational astrophysics

Beneficiaries

The main beneficiaries are research groups in computational astrophysics.

Number of users

5

Development Plan

- Concept: M1

- Start of alpha stage: M6

- Start of beta stage: M11

- Start of testing stage: M14

- Start of deployment stage: M20

- Start of production stage: M23

Resource Requirements

- Number of cores required: 4x480 GPU cores, 32 CPU cores

- Minimum RAM/core required: 1.5GB/video card, 2GB/core

- Storage space during a single run: 100MB

- Long-term data storage: 1TB

- Total core hours required: 300000

Technical Features and HP-SEE Implementation

- Primary programming language: C++

- Parallel programming paradigm: SIMT (Single Instruction, Multiple Threads) with CUDA

- Main parallel code: CUDA, Mathematica

- Pre/post processing code: CUDA, Mathematica

- Application tools and libraries: ROOT framework, CUDA, Mathematica

Usage Example

Infrastructure Usage

- Home system: Computing Center at ISS, located in Magurele, Romania

  - Applied for access on: 02.2011

  - Access granted on: 02.2011

  - Achieved scalability: 2x480

- Accessed production systems:

  1. GPU-SERVER

     - Applied for access on: 03.2011

     - Access granted on: 03.2011

     - Achieved scalability: 4x480

- Porting activities: Yes

- Scalability studies: Yes

Running on Several HP-SEE Centres

- Benchmarking activities and results:

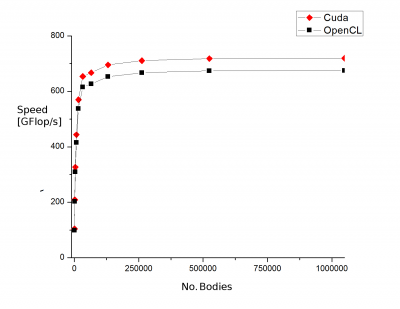

N-Body Simulation with CUDA and OpenCL


Benchmarking was done with a variation of the all-pairs N-body simulation deployed on GPU-SERVER. CUDA outperforms OpenCL by about 5-10%. The tests were run on 480, 960, 1440 and 1920 cores.

- Other issues: Scalability is good up to 1000 cores. Further optimisation of the code is planned (memory management, interaction models, initial conditions, etc.).
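
For readers unfamiliar with the all-pairs scheme used in the benchmark, the following Python sketch shows the core computation: every body accumulates the gravitational acceleration from every other body, giving O(N^2) work per step. The source does not describe the benchmarked variation, so the softening term, the unit gravitational constant and all names here are assumptions for illustration, not the benchmarked CUDA or OpenCL code.

```python
import math

def all_pairs_accelerations(positions, masses, softening=1e-3, g=1.0):
    """Gravitational acceleration on each body from all other bodies.

    `positions` is a list of (x, y, z) tuples and `masses` a list of floats.
    `softening` (hypothetical default) avoids a singularity at small
    separations; the bodies must not coincide when it is set to 0.
    """
    n = len(positions)
    eps2 = softening * softening
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        xi, yi, zi = positions[i]
        for j in range(n):
            if i == j:
                continue  # a body exerts no force on itself
            dx = positions[j][0] - xi
            dy = positions[j][1] - yi
            dz = positions[j][2] - zi
            dist2 = dx * dx + dy * dy + dz * dz + eps2
            # a_i += G * m_j * r_ij / |r_ij|^3
            scale = g * masses[j] / (dist2 * math.sqrt(dist2))
            acc[i][0] += scale * dx
            acc[i][1] += scale * dy
            acc[i][2] += scale * dz
    return acc

# Two unit masses one unit apart attract each other with equal magnitude.
pair_acc = all_pairs_accelerations(
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [1.0, 1.0], softening=0.0
)
```

The outer loop over `i` carries no dependency between iterations, so in a GPU version each body is commonly assigned to its own thread.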

Achieved Results

Publications

Foreseen Activities

DLA (Diffusion-limited aggregation) model implementation for N bodies
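
To make the DLA idea concrete, here is a minimal Python sketch of on-lattice diffusion-limited aggregation in 2D: random walkers are released on the border of a square grid and stick as soon as they touch the growing cluster. The source gives no details of the planned model, so the lattice geometry, the release rule, the boundary handling and all names are assumptions chosen for brevity.

```python
import random

STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def grow_dla(num_particles, size=21, seed=0):
    """Grow a DLA cluster of `num_particles` sites on a size x size lattice.

    Assumes num_particles is much smaller than size * size, so that free
    border sites always remain for releasing new walkers.
    """
    rng = random.Random(seed)
    centre = size // 2
    cluster = {(centre, centre)}  # single seed particle in the middle
    while len(cluster) < num_particles:
        # Release a walker at a random site on one of the four borders.
        t = rng.randrange(size)
        x, y = rng.choice([(t, 0), (t, size - 1), (0, t), (size - 1, t)])
        if (x, y) in cluster:
            continue
        while True:
            # Stick as soon as any of the four neighbours is in the cluster.
            if any((x + dx, y + dy) in cluster for dx, dy in STEPS):
                cluster.add((x, y))
                break
            dx, dy = rng.choice(STEPS)
            nx, ny = x + dx, y + dy
            # Moves that would leave the lattice are rejected.
            if 0 <= nx < size and 0 <= ny < size:
                x, y = nx, ny
    return cluster

cluster = grow_dla(20, size=21, seed=1)
```

The resulting set of lattice sites can be rasterised into an image and passed to a box-counting routine to estimate the fractal dimension of the aggregate.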