GENETATOMICS

From HP-SEE Wiki

General Information

- Application's name: Genetic algorithms in atomic collisions

- Application's acronym: Genetatomics

- Virtual Research Community: Computational Physics

- Scientific contact: Dragan Jakimovski, dragan.jakimovski@gmail.com

- Technical contact: "Boro Jakimovski, boro.jakimovski@finki.ukim.mk", Jane Jovanovski, janejovanovski@gmail.com

- Developers: Faculty of Natural Science and Mathematics, UKIM, FYROMacedonia

- Web site: http://wiki.hp-see.eu/index.php/GENETATOMICS

Short Description

The computer simulation and modeling of various processes involving highly charged ions in plasma are extremely important in contemporary physics. The knowledge of cross sections of electron capture and ionization processes as well as of corresponding sections for excitation of electron(s) in atoms and ions colliding with impurity ions in reactor plasma is valuable in calculations of other plasma parameters and diagnostics of its characteristics in experimental reactor ITER to be build in France. Of special interest are the details of cross sections for different plasma temperatures. The usage of HPC for this application will increase the performance of the genetic algorithms, allowing processing of more parameters and larger intervals in single run.

Problems Solved

The objective of our work is to calculate these multivariable functions (which arise with Schrodinger equation in general) with different values of parameters and different ranges of variables, which in many cases is computer intensive, and require high amount of CPU time. For now the code has been tested, also on various other systems of equations, prominent in physics, applied mathematics and economics

- Volter-Lotka system,

- Equations with non-analytical solutions

- GDP equations from economic theory, and

- Partial differential equations

For systems for which there is known analytical solution the comparison with the numerically obtained result is excellent.

Scientific and Social Impact

Contribution to IAEA and atomic collisions research community, as well as research groups in quantum physics in general. The availability of robust, thoroughly tested parallel genetic algorithm code for various quantum problems with different effective potentials should be beneficial to all those developers interested in independent check of their own, more specialised codes. Possible scaling of the code to large number of processors (up to, say 100) for solving more complex problems and eventually to huge number of more than 100 thousand, processors with different hardware / software environment may prove itself to have important social impact.

Collaborations

- International Atomic Energy Agency, Vienna

Beneficiaries

- Research groups working in atomic / ion collisions and quantum physics in general, for double-checking their own codes;

- Universities and educational centers with strong program in HP physics computing

Number of users

3

Development Plan

- Concept: The concept has been planned before the M1.

- Start of alpha stage: M1-M8

- Start of beta stage: M9-M10

- Start of testing stage: M11-M13

- Start of deployment stage: M14-M15

- Start of production stage: M16-M24

Resource requirements

- Number of cores required: 128 - 2048

- Minimum RAM/core required: 0.5GB

- Storage space during a single run: 10MB

- Long-term data storage: 5GB

- Total core hours required: 1 000 000

Technical Features and HP-SEE Implementation

- Primary programming language: FORTRAN

- Parallel programming paradigm: SMP and MPI

- Main parallel code: In-house development

- Pre/post processing code: In-house development

- Application tools and libraries: none, (planned usage of ATLAS, BLAS, GotoBLAS, ScaLAPAC)

Usage Example

The application is currently being used by hardcoding the new problems into the FORTRAN source. The user then recompiles the source and starts it using desired number of processes.

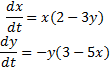

- Example 1: In order to be able to solve equations that are listed in this project, algorithm can optimize and expanded to solve nonlinear equations. As a representative sample for solving this kind of system of differential equations system is taken Walter Lotka system. We choose the system with next parameter:

Equation is solved in the interval ![]() , with initial conditions

, with initial conditions ![]() . It should be noted that this algorithm is in beta, the initial results are shown in the following figure

. It should be noted that this algorithm is in beta, the initial results are shown in the following figure

There is no way for its analytical solution, therefore we compared with NDSolve Wolfram Mathematica (with parameters to precisely resolve).

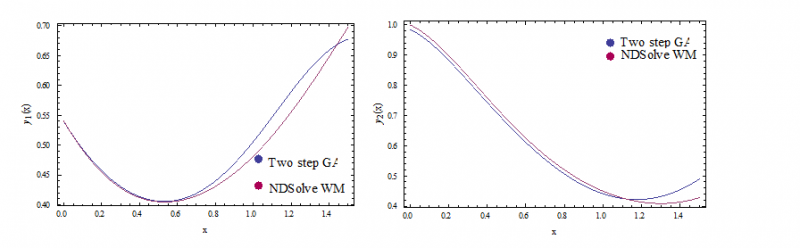

- Example 2: Solving the equation of Newton's Second Law with time variable force. Solve the following equation:

The solution is compared with the exact solution in the following figure:

Infrastructure usage

- Accessed production system: HPCG (Bulgaria)

- Applied for access on: 09.2010

- Access granted on: 09.2010

- Achieved scalability: 16 cores

- Porting activities: Moved on Home system

- Scalability studies: Moved on Home system

- Home system: HPGCC.FINKI (Macedonia)

- Applied for access on: 1.2012

- Access granted on: 1.2012

- Achieved scalability: 60 cores for the current data sets (tested on up to 504 cores). The application was tested directly, before the cluster is operational for testing purposes

- Porting activities: ongoing

- Scalability studies: ongoing

Running on several HP-SEE Centers

- Benchmarking activities and results: The application was successfuly tested for its scalability on HPCG cluster in Bulgaria. Due to long queue waiting times, the problem was not tested for scalability beyond 16 cores. On the HPGCC cluster in Macedonia, the application was used to initially test the system and achieved a scalability of 120 cores. The tests were executed over a medium data set, and we believe that it will scale even more on larger data sets. The results of the scalability measuremets are shown in the following figures:

- Other issues: .

Achieved Results

Scalability Studies

| Code author(s): Jane Jovanovski, Boro Jakimovski | |

| Application areas: Computational Physics | |

| Language: Fortan | Estimated lines of code: 2000 |

| URL: http://wiki.hp-see.eu/index.php/GENETATOMICS | |

Parallelization

We use genetic algorithms and genetic programming for developing algorithm for solving order differential equation (single or system of ODE). One of the main characteristics of genetic algorithms and genetic programming as techniques for implementation of evolutionary paradigms is their exceptional ability to be parallelized. This comes from the fact that the individuals can be evaluated in parallel as their performance rarely, if ever, affects that of other individuals. There are numerous ways for paralelization of genetic algorithms, but here we will consider the following two techniques:

- Island GA: The population is divided on several subpopulations - islands, each subpopulation is a population on its own and is developed on a separate processor. After a certain number of generations, all subpopulations are gathered together into a single population to get mixed, after which they are resent to the processors.

- Paralelization of the fitness function: The most used operation in the evolutionary algorithms is an evaluation of the fitness value of each of the chromosomes. The fitness value is evaluated during selection, after crossover, after mutation. Therefore, this operation takes most of the processor time. There are different techniques for paralelization of the fitness function depending on its shape.

We used the Message Passing Interface (MPI) standard for building the parallel evolutionary algorithm. We tested efficient of parallelism with different type of MPI but we found that version of MPI is not related with scalability results. For different version of MPI we got similar results. The current population consists of chromosomes which contain as many stacks as there are equations in the system that is being solved, and each of the stacks that denotes a postfix representation of the function is represented by a stack with a variable size. For example if we solve single ODE we have a chromosome with one stack, but if we solve system of three ODEs we have chromosome with 3 stacks. As a result of the dynamic size of the arrays and due to the fact that MPI cannot deal with arrays with variable size, it is impossible to divide the population on smaller islands to be sent to the corresponding processors. This reason led us to the implementation of the second technique for paralelization of the fitness function.

New way to measure relative scalability of algorithm

The inovative approach was adopted for measuring the speed-up of parallel implementation of the algorithm due to its stochastic nature. With different runs of the code one gets different functions with different evaluation times. To smooth out this inherent stochasticity of the genetic algorithm we modified the expression for speed-up relative nonparallel case to

where ![]() equals time to develop the

equals time to develop the ![]() generation with

generation with ![]() processors,

processors, ![]() equals time to develop

equals time to develop ![]() generation

with one processor,

generation

with one processor, ![]() equals the number of equations in the system,

equals the number of equations in the system, ![]() equals the mean value of the stack for

whole population for

equals the mean value of the stack for

whole population for ![]() generation as represents the

generation as represents the ![]() equation of the system when the algorithm execution

falls on one processor,

equation of the system when the algorithm execution

falls on one processor, ![]() is the mean value of the stack, for whole population for

is the mean value of the stack, for whole population for ![]() generation, as

represents the

generation, as

represents the ![]() equation of the system when the algorithm runs on

equation of the system when the algorithm runs on ![]() processors.

processors.

Benchmark dataset

For measuring scalability of algorithm we chose to solve one ODE. We choose next equation:

We choose step of 0,002, so we must develop fitness function in 1001 points. Our algorithm is based on two genetic algorithm, parameter of algorithm follows:

- Main genetic algorithm

- Number of generations: 12

- Population size: 50

- Mutation rate: 5%

- Cross over rate: 85%

- Sub genetic algorithm:

- Number of generations: 10

- Population size: 75

- Mutation rate: 5%

- Cross over rate: 50%

Hardware platforms

The application was tested only on HPGCC cluster with Intel Xeon L5640 CPU @2.26 Ghz

Execution times

For measuring speed – up of algorithm we measure time needed for develop 15th generation. After that we use our equation for calculate speed up of algorithm. The results is shown in next tables.

Memory Usage

Maximum memory usage per node is 2.5GB. Active memory is only 30MB per process, but no matter the number of processes per node are started, totoal memory usage for the processes on one node is 2.5GB.

Profiling

Profiling was performed on the sequential algorithm in order to detect the initial CPU utilization code. The MPI application profiling will be done in the following months.

Communication

The MPI communication totals to 200MB/s over QDR Infiniband for 48 processes.

I/O

The input and output of algorithm is small. Input contains: system of ODE or single ODE, initial condition of ODE, interval in which we solve the equation, parameters related with two genetic algorithm. The output is not depend of input parameter. The output is txt file with the best results for each generation. Also for each generation we measure same specific characteristic of population, like: time needed for developed generation, average size of stack size, constant for the best solution etc., therefore the output file is less than 1MB.

CPU and cache

No CPU level analysis was done.

Analysis

From our testing we concluded that hyperthreading should be used when available. For future work it remains to make MPI profiling and if needed to find an efficient strategy of reordering of the computations in order to achieve more stable computational times.

Publications

- J Jovanovski, B Jakimovski and D Jakimovski, Parallel Genetic Algorithms for Finding Solution of System of Ordinary Differential Equations, presentation at ICT Innovations 2011 conference

- J Jovanovski, B Jakimovski and D Jakimovski, Improvements of the Parallel Evolutionary Algorithm for finding solution of a system of ordinary differential equations, The 9th Conference for Informatics and Information Technology (CIIT 2012)

- J Jovanovski, B Jakimovski and D Jakimovski, Parallel Genetic Alghorithms for Finding Solution of System of Ordinary Differential Equations, in L. Kocarev (Ed.) ICT Innovations 2011, Advances in Intelligent and Soft Computing, Springer, 2012, Volume 150/2012, 227-237

Foreseen Activities

- Implementation of a hybrid version using OpenMP

- Implementation of a more configurable application that will accept the problem functions using a configuration file without influencing performance

- Making changes in the code to incorporate partial differential equations as well

- Implementation, testing and production of the code for two-heavy-centers-one-electron system

- Algorithm for solving an ordinary differential equation. Reconstruction of equation for calculate relative sociability for this type of algorithm.

- Algorithm for solving an partial differential equation. Reconstruction of equation for calculate relative sociability for this type of algorithm.