OpenACC Use Case

From HP-SEE Wiki

Effort required

To estimate the effort required to port an existing code to a GPU-enabled infrastructure using OpenACC directives, we used a simple matrix-matrix multiplication algorithm as a basis.

As shown in Listing 1, the changes required to parallelize the for-loop nest that performs the matrix-matrix multiplication are minimal (as is also the case with OpenMP). Note that the input and output chunks of memory to be transferred to and from the GPU card should be explicitly defined, either within the pragma statement or using the data construct (a similar syntax is required in the Fortran case). If the memory transfers are not specified, they are handled automatically by the compiler.

The kernels construct specifies a region of the code that will be executed in parallel. The compiler handles the parallelization automatically: it identifies the loops that can be executed in parallel and generates kernels that run on the accelerator. The compiler also chooses the mapping of threads and blocks.

In OpenACC terms, gang parallelism maps to grid-level parallelism, and vector parallelism maps to thread-level parallelism [1]. The iterations of a loop are broken into vectors, and the vectors are executed in parallel by the streaming multiprocessors (SMs) of the GPU [2].

Listing 1

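    /* c is assumed to be zero-initialized on the host; copy(c) rather than
       copyout(c) makes those zeros available to the += on the device. */
    #pragma acc kernels copyin(a,b) copy(c)
    for (i = 0; i < SIZE; i++) {
        for (j = 0; j < SIZE; j++) {
            for (k = 0; k < SIZE; k++) {
                c[i][j] += a[i][k] * b[k][j];
            }
        }
    }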
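
The data construct mentioned before Listing 1 can be sketched as follows. This is a minimal illustration, not the code used for the measurements: the function name `matmul_data_region`, the flattened row-major `float` arrays and the local accumulator `sum` are assumptions made for this example. With a compiler that does not enable OpenACC, the pragmas are ignored and the loops simply run serially on the CPU.

```c
#include <assert.h>

/* Hypothetical helper (not from the original study):
   computes C = A * B for n x n matrices stored in row-major order. */
void matmul_data_region(int n, const float *restrict a,
                        const float *restrict b, float *restrict c)
{
    assert(n > 0 && a && b && c);

    /* The data construct makes the transfers explicit: a and b are copied
       to the device on entry, c is copied back to the host on exit. */
    #pragma acc data copyin(a[0:n*n], b[0:n*n]) copyout(c[0:n*n])
    {
        /* Inside the data region the compiler still decides how to map
           the loops onto the accelerator. */
        #pragma acc kernels
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                float sum = 0.0f;  /* local accumulator: c is only written */
                for (int k = 0; k < n; k++) {
                    sum += a[i * n + k] * b[k * n + j];
                }
                c[i * n + j] = sum;
            }
        }
    }
}
```

Because the result is accumulated in a local variable and stored once, `c` never has to be read on the device, so `copyout` is sufficient here.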

The parallelization analysis performed by the compiler can be further augmented and tuned by adding directives and clauses that mark loops as independent and define how the work is shared. If these are not given explicitly, the compiler makes heuristic assumptions about how work sharing should be performed, but the developer should ultimately choose the best approach for the given problem. Fine-tuning the parallelization strategy on the GPU resources requires additional pragmas. In the example given in Listing 2 we explicitly mark the innermost loop as sequential, distribute the outermost loop over 512 gangs, and execute the middle loop in vectors of 512 threads that run concurrently on the GPU.

Listing 2

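    /* Plain kernels is used because a block follows; the combined
       kernels loop form must be followed directly by a loop.
       c is assumed to be zero-initialized on the host, hence copy(c). */
    #pragma acc kernels copyin(a,b) copy(c)
    {
        #pragma acc loop independent gang(512)
        for (i = 0; i < SIZE; i++) {
            #pragma acc loop independent vector(512)
            for (j = 0; j < SIZE; j++) {
                #pragma acc loop seq
                for (k = 0; k < SIZE; k++) {
                    c[i][j] += a[i][k] * b[k][j];
                }
            }
        }
    }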

The parallel construct, like the kernels construct, marks a region of the code to be launched in parallel. However, it is more explicit than the kernels construct and requires further analysis by the programmer. We also tested the parallel construct in our case study; the performance achieved is very close to that obtained with the kernels construct.
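
A variant based on the parallel construct might look like the sketch below. It is not the code that was benchmarked: the function name `matmul_parallel`, the flattened row-major `float` arrays and the accumulator `sum` are assumptions introduced for illustration. Unlike kernels, the parallel construct takes the programmer's word that the marked loops are safe to run in parallel.

```c
#include <assert.h>

/* Hypothetical helper (not from the original study):
   computes C = A * B for n x n matrices stored in row-major order. */
void matmul_parallel(int n, const float *restrict a,
                     const float *restrict b, float *restrict c)
{
    assert(n > 0 && a && b && c);

    /* Rows are distributed across gangs; the programmer asserts that the
       iterations are independent, the compiler does not check it. */
    #pragma acc parallel loop gang copyin(a[0:n*n], b[0:n*n]) copyout(c[0:n*n])
    for (int i = 0; i < n; i++) {
        /* Columns of one row are spread over the vector lanes of a gang. */
        #pragma acc loop vector
        for (int j = 0; j < n; j++) {
            float sum = 0.0f;
            /* The dot product itself runs sequentially in each lane. */
            #pragma acc loop seq
            for (int k = 0; k < n; k++) {
                sum += a[i * n + k] * b[k * n + j];
            }
            c[i * n + j] = sum;
        }
    }
}
```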

Benchmark tests

To estimate the performance gain over the plain CPU algorithm, we used a Tesla M2090 GPU card at the HPCG site. Our CPU results were obtained at the same site, specifically on an Intel Xeon E5649 (2.53GHz) CPU.

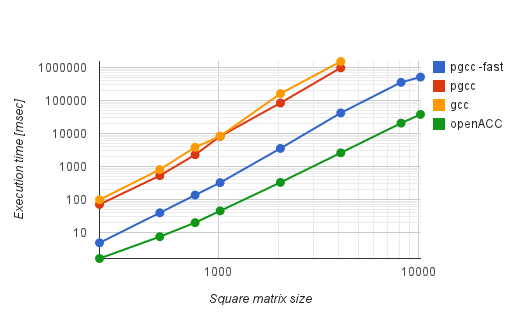

In Figure 1 we compare our OpenACC results with those of the non-OpenACC code (compiled with gcc and pgi without any optimization options, and with pgi using the “-fast” optimization option, which vectorizes the matrix product on the CPU).

Figure 1 - Comparison of execution times for simple matrix-matrix multiplication

As can be seen, the simple OpenACC approach (given in Listing 1) achieves shorter execution times than the serial code on the CPU, even when the compiler vectorizes the product behind the scenes.

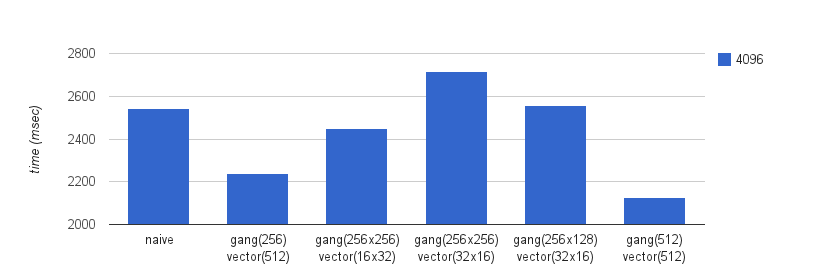

Going into further detail, we measured the execution time of the OpenACC code given in Listing 2 for various gang and vector sizes. Our results for square matrices of size 4096 are shown in Figure 2 below.

Figure 2 - Comparison of different gang and vector size configurations for a square matrix-matrix multiplication of size 4096

The best timings for this matrix size were obtained with gang and vector sizes of 512.
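
A sweep over gang and vector sizes of this kind could be driven by a helper such as the one sketched below. How the original timings were taken is not stated, so everything here is an assumption: the function name `timed_matmul`, the use of the parallel construct with `num_gangs` and `vector_length` in place of the kernels-based Listing 2, the flattened `float` arrays, and the POSIX `clock_gettime` timer. The measured interval includes the host-device transfers.

```c
#define _POSIX_C_SOURCE 199309L  /* for clock_gettime */
#include <assert.h>
#include <time.h>

/* Hypothetical helper (not from the original study): multiplies n x n
   row-major matrices with an explicit number of gangs and vector length
   and returns the elapsed wall-clock time in seconds. */
double timed_matmul(int n, int gangs, int vlen,
                    const float *restrict a, const float *restrict b,
                    float *restrict c)
{
    assert(n > 0 && gangs > 0 && vlen > 0);

    struct timespec t0, t1;
    clock_gettime(CLOCK_MONOTONIC, &t0);

    /* gangs and vlen are the two tuning parameters being swept. */
    #pragma acc parallel loop gang num_gangs(gangs) vector_length(vlen) \
        copyin(a[0:n*n], b[0:n*n]) copyout(c[0:n*n])
    for (int i = 0; i < n; i++) {
        #pragma acc loop vector
        for (int j = 0; j < n; j++) {
            float sum = 0.0f;
            #pragma acc loop seq
            for (int k = 0; k < n; k++) {
                sum += a[i * n + k] * b[k * n + j];
            }
            c[i * n + j] = sum;
        }
    }

    clock_gettime(CLOCK_MONOTONIC, &t1);
    return (double)(t1.tv_sec - t0.tv_sec)
         + (double)(t1.tv_nsec - t0.tv_nsec) * 1e-9;
}
```

Calling `timed_matmul(4096, g, v, a, b, c)` for each candidate pair `(g, v)` and keeping the smallest returned time mirrors the kind of comparison summarized in Figure 2.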

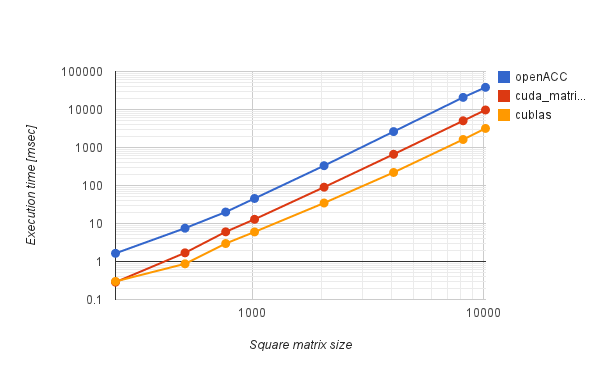

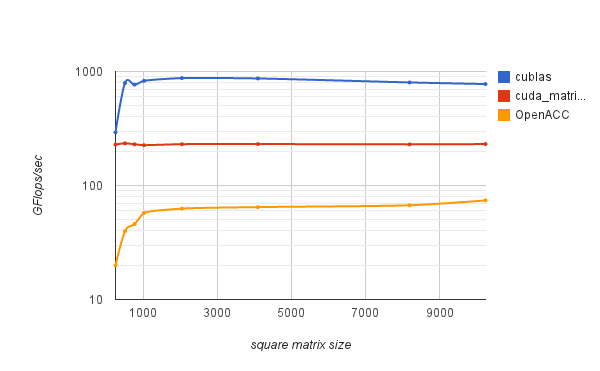

These results, however, are only partial, as there are several existing approaches to implementing an efficient matrix-matrix multiplication that should be preferred to writing one's own code. Thus, in Figures 3 and 4 we provide benchmark and performance results comparing our more advanced OpenACC implementation (Listing 2) to

- a simple (again) CUDA implementation and

- a cuBLAS implementation

Figure 3 - Comparison of execution times

Figure 4 - Comparison of performance achieved

As can be seen from these results, the plain CUDA example code and the cuBLAS implementation clearly outperform the simple OpenACC example code we implemented, even after tuning the parameters of the problem to their optimal values.
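
The text does not say which metric Figure 4 reports. Assuming it is the floating-point throughput commonly quoted for matrix multiplication, it can be derived from a measured execution time as in the sketch below, which counts one multiplication and one addition per innermost iteration (2n³ operations in total). The function name `matmul_gflops` is hypothetical.

```c
#include <assert.h>

/* Hypothetical helper (assumed metric, not confirmed by the source):
   throughput in GFLOP/s of an n x n matrix-matrix multiplication that
   took `seconds` seconds, counting 2 * n^3 floating-point operations. */
double matmul_gflops(int n, double seconds)
{
    assert(n > 0 && seconds > 0.0);
    double flops = 2.0 * (double)n * (double)n * (double)n;
    return flops / seconds / 1e9;
}
```

Under this assumption, a multiplication of size 1000 that takes 2 seconds corresponds to 1 GFLOP/s.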

Conclusions

In conclusion, OpenACC proves very helpful for improving performance on a GPU-enabled infrastructure, as it requires minimal effort to code and implement. The documentation is quite extensive, and even a beginner in parallel programming can get started and obtain remarkable results in a very short time. However, to achieve better results, CUDA and the libraries implemented and supported so far (where such libraries are applicable to the given problem) should be preferred, as these make far better use of the underlying resources.