EagleEye

From HP-SEE Wiki

General Information

- Application's name: Feature Extraction from Satellite Images Using a Hybrid Computing Architecture

- Application's acronym: EagleEye

- Virtual Research Community: Computational Physics

- Scientific contact: Emil Slusanschi, emil.slusanschi@cs.pub.ro

- Technical contact: Nicolae Tapus, nicolae.tapus@cs.pub.ro

- Developers: Cosmin Constantin, Razvan Dobre, Alexandru Herisanu, Ovidiu Hupca, Betino Miclea, Alexandru Olteanu, Emil Slusanschi, Vlad Spoiala, University Politehnica of Bucharest, Computer Science and Engineering Department, Romania

- Web site: http://cluster.grid.pub.ro/

Short Description

Analyzing aerial and satellite images to extract useful features is a long-standing research topic in machine vision. The possible applications are numerous, ranging from identifying terrain features such as forests, agricultural land and waterways and analyzing their evolution over time, to locating man-made structures such as roads and buildings.

Problems Solved

The purpose of the application is to use the Cell B.E. hybrid architecture to speed up feature extraction with relatively simple algorithms that still yield good results. Two subtasks are treated: identifying straight roads (based on the Hough transform) and identifying terrain types (using texture classification). These techniques give good results and are computationally intensive enough to benefit from parallelization and Cell B.E. acceleration.

Obtaining good results requires powerful, computationally intensive algorithms, and the large datasets usually processed require significant time and computing resources. To address this problem, parallel computing is employed: by splitting the load across multiple nodes, computation time is reduced and, depending on the algorithms used, the application can scale almost linearly.

The IBM Cell Broadband Engine (Cell B.E.) is used as a middle ground between general-purpose CPUs and dedicated signal processors. It has one Power Processor Element (PPE) and eight Synergistic Processor Elements (SPEs), which are optimized for compute-intensive single-instruction, multiple-data (SIMD) workloads. By converting the processing algorithms to the Cell B.E. SIMD architecture and running them on its 8 SPEs, a significant speedup is achieved compared to a general-purpose x86 CPU. The downside is that the original code cannot simply be recompiled to take advantage of the Cell B.E.: the algorithm must also be adapted to the new architecture, which is usually not trivial and takes a significant amount of time and engineering effort.
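The page does not describe how an image is divided between nodes or SPEs. As a minimal sketch, assuming a simple horizontal-strip decomposition, each worker (for example an MPI process or an SPE thread) could own a contiguous block of rows and additionally read a halo of neighboring rows, so that neighborhood filters such as the median filter see complete windows at the strip borders. `RowStrip` and `partitionRows` are hypothetical names.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical decomposition: one worker's share of an image.
struct RowStrip {
    int begin, end;          // rows owned by the worker: [begin, end)
    int haloBegin, haloEnd;  // rows the worker must read: [haloBegin, haloEnd)
};

// Splits `rows` image rows over `workers` (> 0) workers as evenly as possible.
// `halo` extra rows are read on each side so that a k x k neighborhood filter
// (halo = k / 2) produces correct results at strip borders.
std::vector<RowStrip> partitionRows(int rows, int workers, int halo) {
    std::vector<RowStrip> strips;
    const int base = rows / workers;
    const int extra = rows % workers;  // the first `extra` workers get one more row
    int begin = 0;
    for (int w = 0; w < workers; ++w) {
        const int end = begin + base + (w < extra ? 1 : 0);
        strips.push_back({begin, end,
                          std::max(0, begin - halo),
                          std::min(rows, end + halo)});
        begin = end;
    }
    return strips;
}
```

For a 13x13 median filter, the halo would be 13 / 2 = 6 rows per side; each worker filters its halo-extended strip but writes back only its owned rows.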

Scientific and Social Impact

The application will allow for automatic detection and classification of features in large datasets of high-resolution satellite images. Analyzing satellite images with the ability to identify features such as forests, agricultural land and waterways will allow for the development of applications with significantly improved capabilities.

Collaborations

Romanian National Meteorological Agency: http://www.meteoromania.ro/index.php?id=0&lang=en

Beneficiaries

Romanian National Meteorological Agency: http://www.meteoromania.ro/index.php?id=0&lang=en

Number of users

12

Development Plan

- Concept: M1 - 09.2010.

- Start of alpha stage: M1-M5. 10.2010-02.2011

- Start of beta stage: M5-M7. 02-05.2011

- Start of testing stage: M7-M9. 05-07.2011

- Start of deployment stage: M9-M12. 07-09.2011

- Start of production stage: M12-M24. 10.2011-09.2012

Resource Requirements

- Number of cores required for a single run: 224

- Minimum RAM/core required: 2 GB/core

- Storage space during a single run: 50-70 GB

- Long-term data storage: 70-100 GB

- Total core hours required: 700 000.
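As a rough consistency check of these figures, assuming every run uses the full 224 cores, the requested allocation corresponds to the following wall-clock time and aggregate memory:

```latex
\frac{700\,000\ \text{core-hours}}{224\ \text{cores}} = 3125\ \text{hours} \approx 130\ \text{days},
\qquad
224\ \text{cores} \times 2\ \text{GB/core} = 448\ \text{GB}
```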

Technical Features and HP-SEE Implementation

1. Needed development tools and compilers:

2. Needed debuggers:

3. Needed profilers:

4. Needed parallel programming libraries: Cell/B.E. SDK 3.1, Pthreads, MPI 2.0 (OpenMPI 1.5.3), GPU programming (CUDA/OpenCL)

5. Needed libraries: OpenCV 2.3.1

6. Main parallel code: feature extraction code, 3D visualization code

7. Pre/post-processing code: none

8. Primary programming language: C/C++

Usage Example

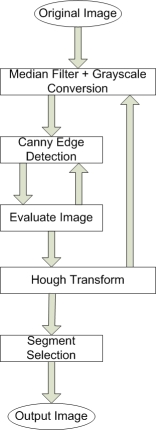

The initial algorithm consists of four steps: applying a median filter to the original image, converting it to grayscale, applying Canny edge detection to the blurred grayscale image, and applying the Hough transform to the resulting edge map. The result is a set of line segments representing possible roads, which are mapped onto the original image. The execution flow is presented in the following picture:

An improved implementation adapts the Canny edge detector thresholds to the input images. The median filter size ranges from 5 to 13 in fixed steps of two. The Hough transform parameters are derived from the median filter size in a very simple manner: the segment size is the filter size plus 5, while the maximum segment distance is fixed at 2. The criteria function for selecting and linking segments in the Hough transform still has some hard-coded parameters, and the Segment Selection step filters out only a small number of false positives. Linking road segments that are far apart is difficult at this stage because of the large number of false positives produced by the Hough transform step. A metric for linking road segments while filtering out the boundaries between nearby fields also seems difficult to achieve at this point. The improved algorithm is displayed in the following diagram:

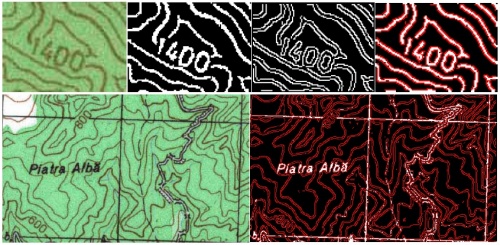
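The Hough transform step of the road detector can be illustrated with a minimal, standard-library-only sketch of the classic (rho, theta) voting scheme: every edge pixel votes for all lines x cos(theta) + y sin(theta) = rho passing through it, and the most-voted accumulator cell gives the dominant straight line. This is a textbook formulation, not the EagleEye code, which presumably relies on OpenCV 2.3.1 (listed among its libraries) and may use a segment-producing variant; the function name and the 1-degree, 1-pixel resolution are assumptions.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct HoughPeak {
    int rho;       // signed distance of the line from the origin, in pixels
    int thetaDeg;  // angle of the line normal, in degrees [0, 180)
    int votes;     // accumulator count at the peak
};

// edges: row-major binary edge map (non-zero = edge pixel), width * height.
HoughPeak houghStrongestLine(const std::vector<std::uint8_t>& edges, int width, int height) {
    const double kPi = 3.14159265358979323846;
    const int numTheta = 180;  // 1-degree angular resolution
    const int maxRho = static_cast<int>(std::ceil(std::hypot(width, height)));
    const int numRho = 2 * maxRho + 1;  // rho in [-maxRho, maxRho]
    std::vector<int> acc(static_cast<std::size_t>(numTheta) * numRho, 0);

    // Precompute the trigonometric tables once.
    std::vector<double> cosT(numTheta), sinT(numTheta);
    for (int t = 0; t < numTheta; ++t) {
        cosT[t] = std::cos(t * kPi / 180.0);
        sinT[t] = std::sin(t * kPi / 180.0);
    }

    // Voting: each edge pixel votes once per angle for the line through it.
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (!edges[static_cast<std::size_t>(y) * width + x]) continue;
            for (int t = 0; t < numTheta; ++t) {
                const int rho = static_cast<int>(std::lround(x * cosT[t] + y * sinT[t]));
                ++acc[static_cast<std::size_t>(t) * numRho + (rho + maxRho)];
            }
        }
    }

    // Peak search: the most-voted (rho, theta) cell is the dominant line.
    HoughPeak best{0, 0, 0};
    for (int t = 0; t < numTheta; ++t) {
        for (int r = 0; r < numRho; ++r) {
            const int v = acc[static_cast<std::size_t>(t) * numRho + r];
            if (v > best.votes) best = HoughPeak{r - maxRho, t, v};
        }
    }
    return best;
}
```

For a 100x10 edge map containing a single horizontal line at y = 5, the strongest cell is rho = 5, theta = 90 degrees, with 100 votes. A road detector would keep several peaks (or segments) rather than only the strongest one.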

Examples of processed images employing the improved algorithm are given below:

As can be seen, there are still some false positives and some recognition issues that our development team has yet to solve.
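The parameter rule of the improved implementation (median filter sizes from 5 to 13 in steps of two, segment size equal to the filter size plus 5, static maximum segment distance of 2) can be written down as a small helper. The type and function names are hypothetical; with OpenCV's `cv::HoughLinesP`, the two values would presumably map onto its `minLineLength` and `maxLineGap` arguments, but the page does not confirm this.

```cpp
#include <vector>

// Hypothetical parameter bundle for the Hough segment-extraction step.
struct HoughParams {
    int minSegmentSize;      // shortest segment kept, in pixels
    int maxSegmentDistance;  // largest gap bridged between segments, in pixels
};

// Median filter apertures used by the improved implementation:
// odd sizes from 5 to 13 in steps of two (5, 7, 9, 11, 13).
std::vector<int> candidateMedianSizes() {
    std::vector<int> sizes;
    for (int k = 5; k <= 13; k += 2) sizes.push_back(k);
    return sizes;
}

// Rule stated in the text: segment size = median filter size + 5,
// maximum segment distance = 2 (static assignment).
HoughParams deriveHoughParams(int medianSize) {
    return HoughParams{medianSize + 5, 2};
}
```

For example, a 9x9 median filter gives a minimum segment size of 14 pixels and a maximum segment distance of 2 pixels.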

Infrastructure Usage

- Home system: NCIT Cluster

  - Applied for access on: 09.2010.

  - Access granted on: 09.2010.

  - Achieved scalability: 224 cores.

- Accessed production systems: 1

  - Applied for access on: 09.2010.

  - Access granted on: 09.2010.

  - Achieved scalability: 224 cores.

- Porting activities: Completed.

- Scalability studies: The envisaged scalability of 224 cores was achieved.

Running on Several HP-SEE Centres

- Benchmarking activities and results: Due to the sensitive nature of the data being analyzed, we cannot run our application on other HP-SEE Centres.

- Other issues: None.

Achieved Results

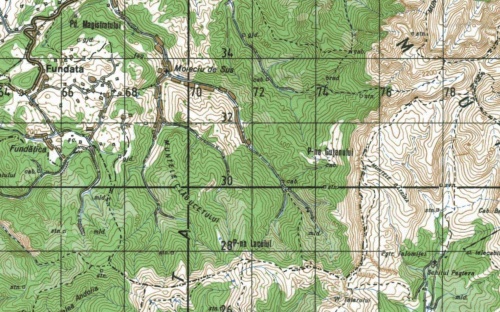

The recognition and extraction of features such as contour lines, roads, forest cover and text from scanned topographic maps is difficult because of complex textured backgrounds and information layers overlaid on top of each other. Color analysis is further complicated by the poor quality of the scanned images and by the use of dithering in the map printing process. To address this, we are developing a system that extracts the information contained in topographic maps and generates separate layers for the different types of features these maps present. A very important aspect of information extraction from topographic maps is the ability to identify regions of text and correctly recognize the characters they contain. A sample topographic map is given below:

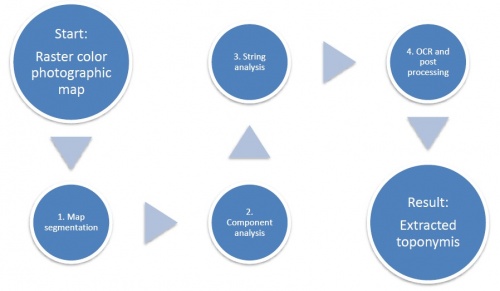

Given a color raster map, our method aims to automatically produce a vectorized representation of the textual layer it contains. The proposed method is divided into four main steps:

- Map segmentation first turns the input color map into a binary map in which characters are isolated from other features and from each other.

- In the component analysis step, connected components are generated and filtered.

- Then, strings are created by grouping close connected components with similar properties.

- Finally, the detected strings are recognized by an OCR engine before being checked and written to a text file.
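The component-analysis step can be illustrated with ordinary 4-connected labeling by breadth-first flood fill over the binary map produced by segmentation. The page does not state the filtering criteria, so this sketch assumes a simple pixel-area window (`minArea`, `maxArea`) that would discard specks and large non-text regions; the names and thresholds are hypothetical. The bounding boxes it records are what a later string-grouping step could compare.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

struct Component {
    int minX, minY, maxX, maxY;  // inclusive bounding box
    int area;                    // number of foreground pixels
};

// bin: row-major binary map (non-zero = foreground), width * height.
// Keeps only components whose area lies in [minArea, maxArea].
std::vector<Component> findComponents(const std::vector<std::uint8_t>& bin,
                                      int width, int height,
                                      int minArea, int maxArea) {
    std::vector<char> visited(bin.size(), 0);
    std::vector<Component> kept;
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t start = static_cast<std::size_t>(y) * width + x;
            if (!bin[start] || visited[start]) continue;
            // Flood-fill a new component starting from (x, y).
            Component c{x, y, x, y, 0};
            std::queue<std::pair<int, int>> q;
            q.push(std::make_pair(x, y));
            visited[start] = 1;
            while (!q.empty()) {
                const std::pair<int, int> p = q.front();
                q.pop();
                ++c.area;
                c.minX = std::min(c.minX, p.first);
                c.maxX = std::max(c.maxX, p.first);
                c.minY = std::min(c.minY, p.second);
                c.maxY = std::max(c.maxY, p.second);
                for (int k = 0; k < 4; ++k) {
                    const int nx = p.first + dx[k], ny = p.second + dy[k];
                    if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                    const std::size_t n = static_cast<std::size_t>(ny) * width + nx;
                    if (bin[n] && !visited[n]) {
                        visited[n] = 1;
                        q.push(std::make_pair(nx, ny));
                    }
                }
            }
            if (c.area >= minArea && c.area <= maxArea) kept.push_back(c);
        }
    }
    return kept;
}
```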

The flow chart of the full process is depicted in the following figure:

For the best results, we combined the outputs of these two filters, using the Canny filter output as a mask for the RGB color-based selection filter. The following picture presents an example of processing brown and black contours: the first image is the original, the second is the output of the RGB color selection filter, the third is the output of the Canny filter, and the last one shows the combined result.
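A minimal sketch of this combination, assuming that "using the Canny filter as a mask" means a per-pixel logical AND between the RGB color-selection result and an already computed Canny edge map. The color window values and all names are hypothetical; the page does not give the ranges used for brown or black ink.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct RGB { std::uint8_t r, g, b; };

// Inclusive RGB window selecting one map color (e.g. brown contour ink).
struct ColorRange { RGB lo, hi; };

bool inRange(const RGB& p, const ColorRange& c) {
    return p.r >= c.lo.r && p.r <= c.hi.r &&
           p.g >= c.lo.g && p.g <= c.hi.g &&
           p.b >= c.lo.b && p.b <= c.hi.b;
}

// Keeps a pixel (255) only if it passes the RGB color selection AND is marked
// in the Canny edge map (non-zero = edge). Both inputs are row-major and must
// contain the same number of pixels.
std::vector<std::uint8_t> maskedColorSelect(const std::vector<RGB>& image,
                                            const std::vector<std::uint8_t>& edges,
                                            const ColorRange& range) {
    std::vector<std::uint8_t> out(image.size(), 0);
    for (std::size_t i = 0; i < image.size(); ++i)
        out[i] = (edges[i] != 0 && inRange(image[i], range)) ? 255 : 0;
    return out;
}
```

Running one such mask per ink color (for example one window for brown and one for black) would yield separate contour layers.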

Publications

- „Towards efficient video compression using scalable vector graphics on the Cell/B.E.”, A. Sandu, E. Slusanschi, A. Murarasu, A. Serban, A. Herisanu, T. Stoenescu, International Conference on Software Engineering, IWMSE '10: Proceedings of the 3rd International Workshop on Multicore Software Engineering, Cape Town, South Africa, 2010, pp. 26-31. ISBN: 978-1-60558-964-0.

- „Cell GAF - a genetic algorithms framework for the Cell Broadband Engine”, M. Petcu, C. Raianu, E. Slusanschi, Proceedings of the 2010 IEEE 6th International Conference on Intelligent Computer Communication and Processing, Cluj-Napoca, Romania, ISBN: 978-1-4244-8228-3, 2010.

- „Parallel Numerical Simulations in Aerodynamics”, M. Poke, E. Slusanschi, D. Podareanu, A. Herisanu, to appear in Proceedings of the 18th International Conference on Control Systems and Computer Science (CSCS18), May, 2011.

- „Airflow Simulator Heat Transfer Computer Simulations of the NCIT-Cluster Datacenter”, A. Stroe, E. Slusanschi, A. Stroe, S. Posea, to appear in Proceedings of the 18th International Conference on Control Systems and Computer Science (CSCS18), May, 2011.

- „Mapping data mining algorithms on a GPU architecture: A study”, A. Gainaru, S. Trausan-Matu, E. Slusanschi, to appear in Proceedings of the 19th International Symposium on Methodologies for Intelligent Systems, Warsaw, Poland, June 2011.

Foreseen Activities

- The detection algorithms implemented so far in the EagleEye framework have the following limitations:

  - The size of the median filter is the same regardless of the contents of the input image.

  - The size of the Gaussian filter used in the Canny edge detector is static.

  - The two thresholds in Canny edge detection are also static.

  - The parameters used in the Hough transform (minimum segment size, maximum segment distance) are also static.

  - The criteria by which segments are chosen and linked in the Hough transform also use some static values.

  - There is a large number of false positives: lines between fields with different colors are usually also marked as roads.

  - Roads that have a small width are not properly detected.

- Because of these limitations, we are actively developing an algorithm that dynamically adapts some of the previously hard-coded parameters to the image content and also filters out more false positives.
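One commonly used way to make the two Canny thresholds depend on image content is to derive them from the median gray level. It is shown here only to illustrate the kind of adaptation described above, not as the algorithm under development; the function name and the default sigma = 0.33 are assumptions.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct CannyThresholds { int low, high; };

// Median-based heuristic: low = (1 - sigma) * median, high = (1 + sigma) * median,
// both clamped to [0, 255]. The grayscale image is taken by value because
// std::nth_element reorders its input.
CannyThresholds autoCannyThresholds(std::vector<std::uint8_t> gray, double sigma = 0.33) {
    if (gray.empty()) return CannyThresholds{0, 0};
    const std::size_t mid = gray.size() / 2;  // upper median for even sizes
    std::nth_element(gray.begin(), gray.begin() + mid, gray.end());
    const double median = gray[mid];
    const double low = std::max(0.0, (1.0 - sigma) * median);
    const double high = std::min(255.0, (1.0 + sigma) * median);
    return CannyThresholds{static_cast<int>(std::lround(low)),
                           static_cast<int>(std::lround(high))};
}
```

A darker image thus gets lower thresholds than a bright one, instead of sharing a single static pair; sigma controls how wide the band between the two thresholds is.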