Examples for application scalability description

From HP-SEE Wiki


| Field | Value |
|---|---|
| Code author(s) | Team leader Emanouil Atanassov |
| Application areas | Computational Physics |
| Language | C/C++ |
| Estimated lines of code | 6000 |
| URL | http://wiki.hp-see.eu/index.php/SET |

Implemented scalability actions

- Our focus in this application was to get the best possible performance out of the hardware platforms available to us. Good scalability depends mostly on avoiding bottlenecks and on using good parallel pseudorandom number generators and generators of low-discrepancy sequences. Because the application requires a great deal of computing time, we took several actions to achieve optimal performance.

- The parallelization was done with MPI. Different MPI implementations were tested, and we found that the particular choice of MPI does not change the scalability results much. This was a fortunate outcome, as it allowed porting to the Blue Gene/P architecture without substantial changes.

- Once we had ensured that our MPI parallelization model achieved good parallel efficiency, we concentrated on getting the best possible performance from a single CPU core.

- We performed profiling and benchmarking, and we tested and compared different pseudorandom number generators and low-discrepancy sequences.

- We tested various compilers and concluded that the Intel compiler currently gives the best results for the CPU version running on our Intel Xeon cluster. For the IBM Blue Gene/P architecture the obvious choice was the IBM XL compiler suite, since it has an advantage over the GNU Compiler Collection: it supports the "Double Hummer" mode of the CPUs (their dual floating-point unit), which can double floating-point throughput. For the recently developed GPU-based version we rely on the C++ compiler supplied by NVIDIA.

- For each chosen compiler we ran tests to select the best compiler and linker options. For the Intel-based cluster, one important source of ideas was the SPEC benchmark website, which lists the options used for each sub-test of the SPEC suite. From there we also took the idea of two-pass compilation (profile-guided optimization), in which profiling data collected with the first-pass build are fed into the second compilation pass to optimise further.
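The text above does not say which parallel generators SET finally uses, so the following is only a hedged illustration of what "parallel pseudorandom number generators and generators for low-discrepancy sequences" typically involves. It sketches block splitting: every MPI process starts its own non-overlapping portion of one sequence. For pseudorandom numbers it uses a 64-bit linear congruential generator with Knuth's MMIX constants and an O(log k) jump-ahead; for quasi-random numbers it uses the base-2 van der Corput sequence, where a process simply takes a disjoint range of indices. All names (`lcg_next`, `lcg_jump`, `rank_stream_seed`, `to_unit`, `radical_inverse2`) and the choice of generator are assumptions, not SET code.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical example, not taken from SET: a 64-bit LCG using Knuth's
   MMIX constants; the modulus 2^64 comes from unsigned overflow. */
#define LCG_A 6364136223846793005ULL
#define LCG_C 1442695040888963407ULL

/* One step: x_{n+1} = A * x_n + C (mod 2^64). */
uint64_t lcg_next(uint64_t x) {
    return LCG_A * x + LCG_C;
}

/* Advance k steps with O(log k) multiplications by repeatedly squaring
   the affine map x -> A*x + C (binary exponentiation). */
uint64_t lcg_jump(uint64_t x, uint64_t k) {
    uint64_t cur_mult = LCG_A, cur_plus = LCG_C;
    uint64_t acc_mult = 1, acc_plus = 0;
    while (k > 0) {
        if (k & 1) {
            acc_mult *= cur_mult;
            acc_plus = acc_plus * cur_mult + cur_plus;
        }
        cur_plus = (cur_mult + 1) * cur_plus;
        cur_mult *= cur_mult;
        k >>= 1;
    }
    return acc_mult * x + acc_plus;
}

/* Block splitting: process `rank` starts rank * block_len steps after the
   common seed, so streams cannot overlap as long as every process draws
   at most block_len numbers. */
uint64_t rank_stream_seed(uint64_t seed, int rank, uint64_t block_len) {
    assert(rank >= 0);
    return lcg_jump(seed, (uint64_t)rank * block_len);
}

/* Map a 64-bit state to a double in [0, 1) using its top 53 bits. */
double to_unit(uint64_t x) {
    return (double)(x >> 11) * 0x1.0p-53;
}

/* Base-2 radical inverse (van der Corput sequence): mirror the binary
   digits of i about the binary point, e.g. 6 = 110b -> 0.011b = 0.375. */
double radical_inverse2(uint64_t i) {
    double x = 0.0, f = 0.5;
    while (i != 0) {
        if (i & 1) x += f;
        i >>= 1;
        f *= 0.5;
    }
    return x;
}
```

Jump-ahead is what makes block splitting cheap: a process can reach its starting point without generating all the preceding numbers. Real Monte Carlo codes usually prefer generators with far better statistical quality than a plain LCG, but the splitting pattern is the same.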

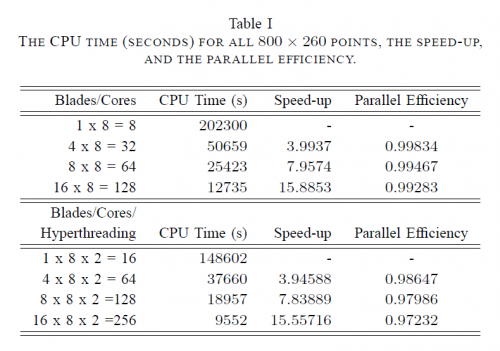

- On the HPCG cluster we also measured the performance of the parallel code with and without hyperthreading. Hyperthreading does not always improve overall speed: the hardware threads on a core share its floating-point units, so code dominated by floating-point computation gains little or nothing from it. Our experience with other applications of the HP-SEE project provides such examples. For the SET application, however, we measured about a 30% improvement with hyperthreading turned on. We consider this a good result; it also indicates that the code is now efficient, in the sense that most of its work is floating-point computation, unlike some earlier versions, where the gain from hyperthreading was larger.

- For the NVIDIA-based version we obtained much better performance with the newer M2090 cards than with the old GTX 295. This was expected: the integer performance of the GTX 295 is comparable to that of the M2090, but its double-precision floating-point performance is several times lower.

Benchmark dataset

For benchmarking we fixed a particular division of the domain into 800 by 260 points, an electric field of 15, and an evolution time of 180 femtoseconds. In this setting the computational time is proportional to the number of Markov chain Monte Carlo trajectories. In most tests we used 1 billion (10^9) trajectories, but for some tests we reduced that number to shorten the overall testing time.
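Because the run time is proportional to the number of trajectories, distributing the work amounts to splitting the trajectory count over the MPI processes. The sketch below is a hedged illustration under that assumption: the helper names `traj_range` and `scale_time` and the `TrajRange` struct are hypothetical, not part of SET. In an MPI program each process would pass the values from `MPI_Comm_size` and `MPI_Comm_rank`, simulate its own trajectories, and the partial estimators on the 800 by 260 grid would then be summed, for example with `MPI_Reduce` and `MPI_SUM`.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper, not from SET: the contiguous block of global
   trajectory indices assigned to one MPI process. */
typedef struct {
    uint64_t first;  /* global index of this rank's first trajectory */
    uint64_t count;  /* number of trajectories this rank simulates   */
} TrajRange;

/* Split n_total trajectories as evenly as possible over n_procs ranks;
   the first (n_total % n_procs) ranks get one extra trajectory, so the
   per-rank workloads differ by at most one trajectory. */
TrajRange traj_range(uint64_t n_total, int n_procs, int rank) {
    assert(n_procs > 0 && rank >= 0 && rank < n_procs);
    uint64_t p = (uint64_t)n_procs, r = (uint64_t)rank;
    uint64_t base = n_total / p, extra = n_total % p;
    TrajRange t;
    t.count = base + (r < extra ? 1 : 0);
    t.first = r * base + (r < extra ? r : extra);
    return t;
}

/* With the grid, field and evolution time fixed, time scales linearly
   with the trajectory count, so a shortened test run can be scaled up
   to estimate the full workload. */
double scale_time(double t_measured, uint64_t n_measured, uint64_t n_target) {
    assert(n_measured > 0);
    return t_measured * ((double)n_target / (double)n_measured);
}
```

Contiguous index ranges also combine naturally with block-split random streams: a rank's first trajectory index tells it how far to jump ahead in a shared sequence.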

Hardware platforms

The CPU version was run on the HPCG cluster and the Blue Gene/P supercomputer, and the GPU version on two NVIDIA-based resources. In total, four distinct hardware platforms were used:

- the HPCG cluster, with Intel Xeon X5560 CPUs @ 2.8 GHz;

- Blue Gene/P, with PowerPC CPUs;

- our GTX 295-based GPU cluster, with Intel Core i7 920 processors;

- our new M2090-based resource, with Intel Xeon X5650 processors.