Libraries usage

From HP-SEE Wiki

Contents |

mpiBLAST

Section to be contributed by SZTAKI

FFTW

Section contributed by IPB

The Fastest Fourier Transform in the West (FFTW) is a comprehensive collection of C routines for computing the discrete Fourier transform (DFT) and its various special cases. Detail description of FFTW features is available at FFTW official web site (www.fftw.org) and at HP-SEE wiki Software Stack and Technology Watch / Library (http://hpseewiki.ipb.ac.rs/index.php/Libraries).

Within the NUQG application, FFTW library is used for complex one-dimensional and multi-dimensional DFTs. It is used in serial, but also in OpenMP and MPI parallel mode, as well as in hybrid OpenMP/MPI mode. Here is described NUQG FFTW serial and OpenMP usage in 1D, 2D, and 3D, while MPI and hybrid OpenMP/MPI FFTW implementation in NUQG is illustrated in the Hybrid programming and Distributed memory sections respectively.

The following pseudo-codes shows FFTW3 serial usage in 1D, 2D, and 3D. The codes have to be linked with the fftw3 library -lfftw3 -lm on Unix systems. In one-dimensional DFT pseudo-code, Nx is the size of the transform. In multi-dimensional pseudo-codes Nx and Ny define matrix size in two-dimensional transform, and Nx, Ny, Nz define tensor size in 3D transform. FFTW_FORWARD and FFTW_BACKWARD in plan definition indicate the direction of the transform. Once the plan has been created, it can be used as many times as you like for transforms on the specified in/out arrays, computing the actual transforms via fftw_execute(plan). The plan is deallocated by fftw_destroy_plan(plan) function. Computing the forward transform followed by the backward transform (or vice versa) yields the original array scaled by the size of the array Nx, size of matrix Nx * Ny, and size of tensor Nx * Ny * Nz, so it's necessary to rescale the data after the transformation.

// FFTW3 serial usage in 1D

#include <fftw3.h>

int main(int argc, char **argv) {

long cnti;

long Nx;

fftw_complex *array;

fftw_plan plan_forward, plan_backward;

array = ((fftw_complex *) fftw_malloc(sizeof(fftw_complex) * Nx));

plan_forward = fftw_plan_dft_1d(Nx, array, array, FFTW_FORWARD, FFTW_ESTIMATE);

plan_backward = fftw_plan_dft_1d(Nx, array, array, FFTW_BACKWARD, FFTW_ESTIMATE);

fftw_execute(plan_forward);

fftw_execute(plan_backward);

for(cnti = 0; cnti < Nx; cnti ++) {

array[cnti][0] /= Nx;

array[cnti][1] /= Nx;

}

fftw_destroy_plan(plan_forward);

fftw_destroy_plan(plan_backward);

fftw_free(array);

}

// FFTW3 serial usage in 2D

#include <fftw3.h>

int main(int argc, char **argv) {

long cnti;

long Nx, Ny;

fftw_complex *array;

fftw_plan plan_forward, plan_backward;

array = ((fftw_complex *) fftw_malloc(sizeof(fftw_complex) * Nx * Ny));

plan_forward = fftw_plan_dft_2d(Nx, Ny, array, array, FFTW_FORWARD, FFTW_ESTIMATE);

plan_backward = fftw_plan_dft_2d(Nx, Ny, array, array, FFTW_BACKWARD, FFTW_ESTIMATE);

fftw_execute(plan_forward);

fftw_execute(plan_backward);

for(cnti = 0; cnti < Nx * Ny; cnti ++) {

array[cnti][0] /= Nx * Ny;

array[cnti][1] /= Nx * Ny;

}

fftw_destroy_plan(plan_forward);

fftw_destroy_plan(plan_backward);

fftw_free(array);

}

// FFTW3 serial usage in 3D

#include <fftw3.h>

int main(int argc, char **argv) {

long cnti;

long Nx, Ny, Nz;

fftw_complex *array;

fftw_plan plan_forward, plan_backward;

array = ((fftw_complex *) fftw_malloc(sizeof(fftw_complex) * Nx * Ny * Nz));

plan_forward = fftw_plan_dft_3d(Nx, Ny, Nz, array, array, FFTW_FORWARD, FFTW_ESTIMATE);

plan_backward = fftw_plan_dft_3d(Nx, Ny, Nz, array, array, FFTW_BACKWARD, FFTW_ESTIMATE);

fftw_execute(plan_forward);

fftw_execute(plan_backward);

for(cnti = 0; cnti < Nx * Ny * Nz; cnti ++) {

array[cnti][0] /= Nx * Ny * Nz;

array[cnti][1] /= Nx * Ny * Nz;

}

fftw_destroy_plan(plan_forward);

fftw_destroy_plan(plan_backward);

fftw_free(array);

}

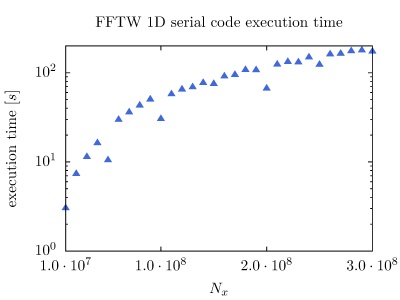

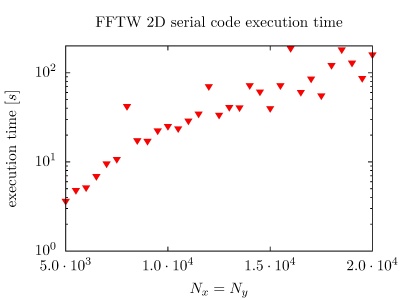

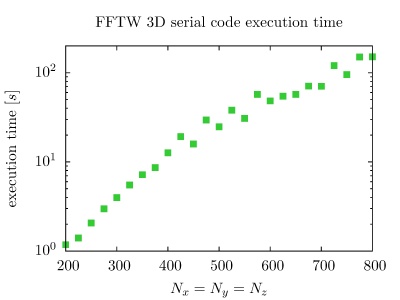

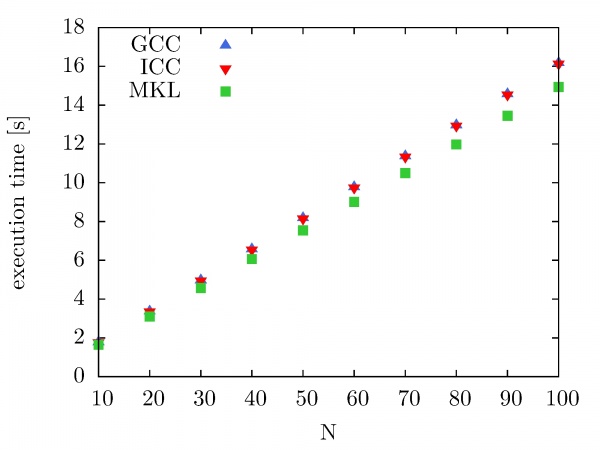

On PARADOX cluster the NUQG application uses FFTW library version 3.3.1. The execution times of the application in 1D, 2D, and 3D as a function of array size, matrix size, and tensor size are given in the figure below.

The next set of pseudo-code gives overview of FFTW OpenMP usage in 1D, 2D, and 3D. Applications using the parallel complex transforms should be linked with -lfftw3_threads -lfftw3 -lm, or -lfftw3_omp -lfftw3 -lm if the program is compiled with OpenMP. It is also needed to link with whatever library is responsible for threads on your system (e.g. -lpthread on GNU/Linux) or include whatever compiler flag enables OpenMP (e.g. -fopenmp with gcc). Before calling any FFTW routines fftw_init_threads() function has to be called. This function, which need only be called once, performs any one-time initialization required to use threads on the system. It returns zero if there was some error and a non-zero value otherwise.

Before creating a plan FFTW3 multi-threaded usage require call fftw_plan_with_nthreads(int nthreads). The nthreads argument indicates the number of threads that will be used by FFTW. All plans subsequently created with any planner routine will use that many threads. Plans already created before a call to fftw_plan_with_nthreads are unaffected. If nthreads is equal to 1 (the default), threads are disabled for subsequent plans.

With OpenMP, to configure FFTW to use all of the currently running OpenMP threads (set by omp_set_num_threads(nthreads) or by the OMP_NUM_THREADS environment variable) the following line can be used fftw_plan_with_nthreads(omp_get_num_threads()). The 'omp_' OpenMP functions are declared via #include <omp.h>.

Given a plan, might be executed as before with fftw_execute(plan), and the execution will use the number of threads specified when the plan was created. The plan can be destroyed as it was in serial code with fftw_destroy_plan, while the threads could be deallocated by function fftw_cleanup_threads(void).

// FFTW3 multi-threaded usage in 1D

#include <fftw3.h>

int main(int argc, char **argv) {

long cnti;

long Nx;

fftw_complex *array;

fftw_plan plan_forward, plan_backward;

array = ((fftw_complex *) fftw_malloc(sizeof(fftw_complex) * Nx));

fftw_init_threads();

fftw_plan_with_nthreads(omp_get_max_threads());

plan_forward = fftw_plan_dft_1d(Nx, array, array, FFTW_FORWARD, FFTW_ESTIMATE);

plan_backward = fftw_plan_dft_1d(Nx, array, array, FFTW_BACKWARD, FFTW_ESTIMATE);

fftw_execute(plan_forward);

fftw_execute(plan_backward);

for(cnti = 0; cnti < Nx; cnti ++) {

array[cnti][0] /= Nx;

array[cnti][1] /= Nx;

}

fftw_destroy_plan(plan_forward);

fftw_destroy_plan(plan_backward);

fftw_cleanup_threads();

fftw_free(array);

}

// FFTW3 multi-threaded usage in 2D

#include <fftw3.h>

int main(int argc, char **argv) {

long cnti;

long Nx, Ny;

fftw_complex *array;

fftw_plan plan_forward, plan_backward;

array = ((fftw_complex *) fftw_malloc(sizeof(fftw_complex) * Nx * Ny));

fftw_init_threads();

fftw_plan_with_nthreads(omp_get_max_threads());

plan_forward = fftw_plan_dft_2d(Nx, Ny, array, array, FFTW_FORWARD, FFTW_ESTIMATE);

plan_backward = fftw_plan_dft_2d(Nx, Ny, array, array, FFTW_BACKWARD, FFTW_ESTIMATE);

fftw_execute(plan_forward);

fftw_execute(plan_backward);

for(cnti = 0; cnti < Nx * Ny; cnti ++) {

array[cnti][0] /= Nx * Ny;

array[cnti][1] /= Nx * Ny;

}

fftw_destroy_plan(plan_forward);

fftw_destroy_plan(plan_backward);

fftw_cleanup_threads();

fftw_free(array);

}

// FFTW3 multi-threaded usage in 3D

#include <fftw3.h>

int main(int argc, char **argv) {

long cnti;

long Nx, Ny, Nz;

fftw_complex *array;

fftw_plan plan_forward, plan_backward;

array = ((fftw_complex *) fftw_malloc(sizeof(fftw_complex) * Nx * Ny * Nz));

fftw_init_threads();

fftw_plan_with_nthreads(omp_get_max_threads());

plan_forward = fftw_plan_dft_3d(Nx, Ny, Nz, array, array, FFTW_FORWARD, FFTW_ESTIMATE);

plan_backward = fftw_plan_dft_3d(Nx, Ny, Nz, array, array, FFTW_BACKWARD, FFTW_ESTIMATE);

fftw_execute(plan_forward);

fftw_execute(plan_backward);

for(cnti = 0; cnti < Nx * Ny * Nz; cnti ++) {

array[cnti][0] /= Nx * Ny * Nz;

array[cnti][1] /= Nx * Ny * Nz;

}

fftw_destroy_plan(plan_forward);

fftw_destroy_plan(plan_backward);

fftw_cleanup_threads();

fftw_free(array);

}

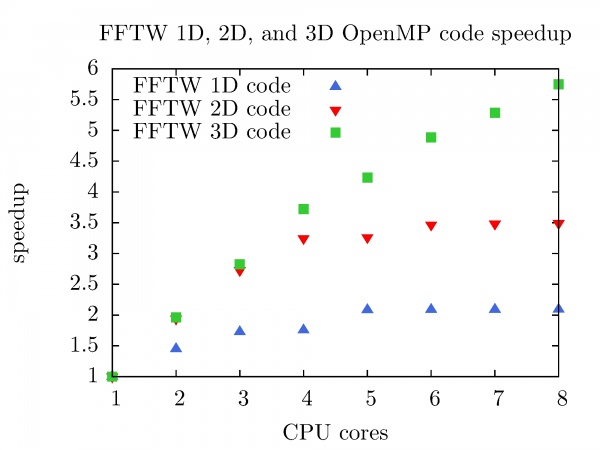

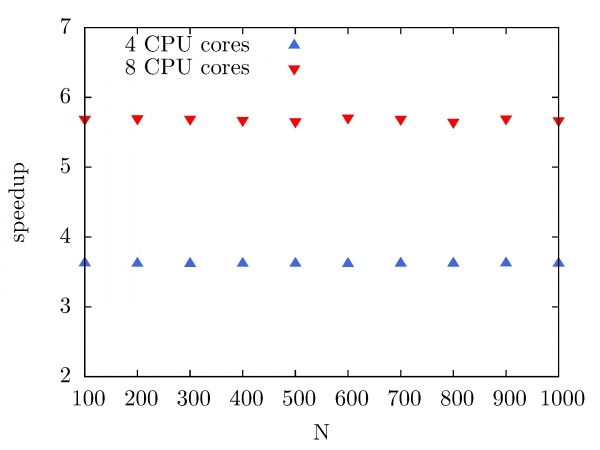

The speedup of FFTW OpenMP code in 1D, 2D, and 3D as a function of number of CPU cores is given in the figure below. FFTW 1D code (Nx = 3x10^8) at PARADOX cluster with 8 CPU cores achieves speedup of 2.1. Better speedup is achieved with FFTW 2D code (Nx = Ny = 2 x 10^4) and FFTW 3D code (Nx = Ny = Nz = 800), 3.5 and 5.8 respectively. It's worth to mention that speedup of FFTW 3D code with 8 CPU cores of PARADOX cluster is achieved by Intel MKL FFTW library with FFTW 1D code.

Intel MKL

Section contributed by IPB

Intel Math Kernel Library (Intel MKL) (http://software.intel.com/en-us/articles/intel-mkl/) is a library of optimized, extensively threaded mathematical routines for solving large scientific and engineering computational problems. The library supports C/C++ and Fortran language APIs. The most of Intel MKL functions are threaded, which enables more efficient usage of today’s multi-core processors and easier parallelization of application or implementation of a hybrid programming model. Threading is performed through OpenMP, and the following mathematical domains are supported:

- Sparse Linear Algebra – Sparse BLAS, Sparse Format Converters, PARDISO Direct Sparse Solver, Iterative Sparse Solvers and Pre-conditioners

- Fast Fourier Transforms

- Optimized LINPACK benchmark

- Vector Math Library

- Statistics Functions – Vector Random Number Generators, Summary Statistics Library

- Cluster Support – ScaLAPACK, Cluster FFT

The NUQG application uses Intel MKL Fast Fourier Transform and Trigonometric Transform functionality. The FFTW3 wrappers are a set of functions and data structures, depending on each other. The wrappers are not designed to provide the interface on a function-per-function basis, and some of the wrapper functions are empty and do nothing, but they are present to avoid linking errors and satisfy function calls.

The FFTW3 wrappers provide equivalent implementation for double- and single-precision functions (those with prefixes fftw_ and fftwf_, respectively). The FFTW3 interface provided by the wrappers is defined in the header files fftw3.h and fftw3.f. These files are identical to the ones from the FFTW3.2 package, and are included in the distribution of Intel MKL. Additionally, the files fftw3_mkl.h, fftw3_mkl.f, and fftw3_mkl_f77.h define supporting structures, supplementary constants and macros, and expose Fortran interface in C.

A function call for creation of an FFT plan may return a NULL plan, indicating that the functionality is not supported. Therefore, it is recommended to carefully check the result returned by a plan creation function within the application. In particular, the following problems return a NULL plan:

-

c2randr2cproblems with a split storage of complex data. -

r2rproblems with kind valuesFFTW_R2HC,FFTW_HC2R, andFFTW_DHT. - Multidimensional

r2rtransforms. - Transforms of multidimensional vectors.

- Multidimensional transforms with rank >

MKL_MAXRANK.

The new value MKL_RODFT00 is introduced for the kind parameter by the FFTW3 wrappers. For better performance, it is strongly suggested to use this value, rather than the FFTW_RODFT00:

plan1 = fftw_plan_r2r_1d(n, in1, out1, FFTW_RODFT00, FFTW_ESTIMATE); plan2 = fftw_plan_r2r_1d(n, in2, out2, MKL_RODFT00, FFTW_ESTIMATE);

The flags parameter in plan creation functions is always ignored. The same algorithm is used regardless of the value of this parameter. In particular, flags values FFTW_ESTIMATE, FFTW_MEASURE, etc. have no effect.

For multithreaded plans, the normal sequence of calls to the fftw_init_threads() and fftw_plan_with_nthreads() functions can be used.

FFTW3 wrappers are not fully thread-safe. If the new-array execute functions, such as fftw_execute_dft(), share the same plan from parallel user threads, we suggest to set the number of the sharing threads before creation of the plan. For this purpose, the FFTW3 wrappers provide a header file fftw3_mkl.h, which defines a global structure fftw3_mkl with a field to be set to the number of sharing threads. Below is an example of setting the number of sharing threads:

#include "fftw3.h" #include "fftw3_mkl.h" fftw3_mkl.number_of_user_threads = 4; plan = fftw_plan_dft(...);

The wrappers typically indicate a problem by returning a NULL plan. In few cases, the wrappers may report a descriptive message of the problem detected. By default, the reporting is turned off. To turn it on, one needs to set a variable fftw3_mkl.verbose to the non-zero value, for example:

#include "fftw3.h" #include "fftw3_mkl.h" fftw3_mkl.verbose = 0; plan = fftw_plan_r2r(...);

Intel MKL Fast Fourier Transform and Trigonometric Transform functionality is used by NUQG and tested on the PARADOX cluster. First, we have compared execution times of serial codes that use FFTW3 3.1.2 library compiled with the GCC compiler, compiled with the ICC compiler, as well as FFTW library provided by Intel MKL 10.2.3. The execution times are shown in the figure below as a function of a number of performed forward and backward DFT transforms. The size of the data array is 10^7. As we can see, there is no significant improvement in performance when the FFTW3 3.1.2 library is compiled with ICC instead of GCC. However, some improvement in performance is achieved when Intel MKL FFTW library is used.

The following figure illustrates the speedup of Intel MKL Fast Fourier Transform library at PARADOX cluster as a function of number of cores. In this case, the same data array size is used (10^7), while the number of performed forward and backward DFT transforms have been changed from 100 to 1000 with step of 100. As it is illustrated, the speedup of Intel MKL FFTW library at PARADOX cluster is 3.8 with 4 CPU cores, and 5.8 with 8 CPU cores.

SPRNG

Section contributed by IPB

Pseudorandom number generation algorithms are key components in many modern scientific computing applications and the quality of the obtained research results often critically depends on their characteristics. The random number generating algorithms should be of sufficiently high quality, so as to ensure that numerical results give unbiased and reliable estimates of physical quantities. SPRNG (Scalable Parallel Random Number Generators) is one of the best libraries for generating pseudorandom numbers for parallel applications. Detail description of SPRNG features is available at the official web page (sprng.fsu.edu) and at the HP-SEE Wiki Software Stack and Technology Watch / Library (http://hpseewiki.ipb.ac.rs/index.php/Libraries) section.

Two versions of the SPRNG library are available on the PARADOX cluster: SPRNG 2.0 and SPRNG 4.0. The NUQG application uses SPRNG 4.0 library, both in the serial and in the MPI mode for the Monte Carlo calculation of path integrals of a generic quantum mechanical theory. Typical usage in the serial mode is illustrated with the following pseudo-code:

#include <sprng_cpp.h>

#define SEED 12345

int main()

{

int streamnum, nstreams;

double rn;

int irn;

Sprng * stream;

streamnum = 0;

nstreams = 1;

stream = SelectType(SPRNG_CMRG);

stream->init_sprng(streamnum, nstreams, SEED, SPRNG_DEFAULT);

// Generate a double precision random number

rn = stream->sprng();

// Generate an integer random number

irn = stream->isprng();

// Free memory used to store stream state

ptr->free_sprng();

}

The SPRNG MPI usage is illustrated with the following pseudo-code:

#include <sprng_cpp.h>

#define SEED 12345

int main(int argc, char *argv[])

{

int streamnum, nstreams;

Sprng *stream;

double rn;

int myid, nprocs;

// Initialize MPI

MPI_Init(&argc, &argv);

MPI_Comm_rank(MPI_COMM_WORLD, &myid);

MPI_Comm_size(MPI_COMM_WORLD, &nprocs);

streamnum = myid;

// One stream per processor

nstreams = nprocs;

//node 0 is reading in a generator type

stream = SelectType(SPRNG_CMRG);

// Initialize stream

stream->init_sprng(streamnum,nstreams,SEED,SPRNG_DEFAULT);

// Generate double precision random number

rn = stream->sprng();

// Free memory used to store stream state

stream->free_sprng();

// Terminate MPI

MPI_Finalize();

}

The NUQG Monte Carlo (MC) algorithm uses SPRNG streams independently, with almost no interprocess communication, and therefore the scaling of MPI NUQG MC algorithm with the number of processors is perfectly linear.

CPMD

Section contributed by IPB

The CPMD code is a parallelized plane-wave/pseudopotential implementation of the Density Functional Theory (DFT), particularly designed for ab-initio molecular dynamics. CPMD runs on a large variety of different computer architectures and is optimized to run on either (super-)scalar or vector CPUs. General description of CPMD and the list of features are available at the official web site (cpmd.org) and at the HP-SEE Wiki Software Stack and Technology Watch / Library section http://hpseewiki.ipb.ac.rs/index.php/CPMD.

CPMD is ported on the PARADOX cluster with MPI parallelization. The application is fully functional and can be started with the following command:

[dusan@ui ~]$ cpmd.x file.in [PP_path] > file.out

where file.in is an input file and [PP_path] is the location of pseudopotential files for all atomic species specified in the file.in input file.

The path to the pseudopotential library can be given in different ways:

- By setting the second command line argument

[PP_path]. - If the second command line argument

[PP_path]is not given, the program checks the environment variablesCPMD_PP_LIBRARY_PATHandPP_LIBRARY_PATH. - If neither the environment variables nor the second command line argument are set, the program assumes that the pseudopotential files reside in the current directory.

During the run, cpmd.x creates different outputs:

- Various status messages to monitor the correct operation of the program is printed to standard output (in our case, redirected to the file

file.out).

- Detailed data are written into different files (depending on the keywords specified in the input

file.in). Large files are written either to the current directory, the directory specified by the environment variableCPMD_FILEPATH, or the directory specified in the input file using the keywordFILEPATH.

The CPMD official web site provides several CPMD introduction guides:

- Wavefunction Optimization Example - demonstrate some of the basic steps of performing a CPMD calculation with a very simple molecule: hydrogen, and a very simple task: calculate the electronic structure.

http://cpmd.org/cpmd-tutorial/the-basics-running-cpmd-input-and-output-formats

- Geometry Optimization and MD Example - inspect how to perform a geometry optimization and how to setup and analyze a Car-Parrinello MD.

http://cpmd.org/cpmd-tutorial/the-basics-of-cpmd-geometry-optimization-and-md

- Electron Structure and Geometry Optimization Example - gives three exercises (Hydrogen molecule, Water and Ammonia) to practice with the basics of CPMD.

http://cpmd.org/cpmd-tutorial/exercise-1-electron-structure-and-geometry-optimization

- Car-Parrinello MD Example - explain how to setup a Car-Parrinello Molecular Dynamics run.

http://cpmd.org/cpmd-tutorial/exercise-2-car-parrinello-md

- Bulk Systems Example - look into more complex systems like bulk materials.

http://cpmd.org/cpmd-tutorial/exercise-3-bulk-systems

- Determination of Dynamical Properties Example - is a collection of some examples on how to determine and tune dynamical properties in CPMD.

http://cpmd.org/cpmd-tutorial/exercise-4-determination-of-dynamical-properties

- Proton transfer in an Enzyme Example - shows how to setup/run a model system for a real-life simulation.

http://cpmd.org/cpmd-tutorial/exercise-5-triade

GAMESS

Section contributed by IICT-BAS

In order to explore the capabilities of the free quantum-chemical software Firefly PCGamess, appropriate purely organic molecules were chosen, with the aid, on a later stage the program to be applied to metal-containing systems, like cisplatin analogues. The work done has thus an important methodological significance. Molecular mechanics conformational search for the two species was performed, and the global minimum-energy conformations thus obtained, were further optimized at HF ab initio (3-21G** basis set, Firefly PCGamess) level of theory. In both cases, specific, scorpion-like conformations are realized, with hydrogen bonds involving the guanidino-group and the phenolic hydroxyl. The application was porting to MPI using GAMESS application software on the HPCG cluster at IICT-BAS. A lot of MPI tests were done up to 32 cores to optimize the application. A good parallel efficiency is obtained up to 32 cores on HPCG cluster. Some problems were solved considered different versions of Gamess software application. All tasks using Gamess are compiled on HPCG cluster with licensed Intel MKL (Math Kernel Library) compiler.

libtiff

Section contributed by UVT

FuzzyCmeans used a special library, libtiff, to deal with the digital images involved in the computational process. Libtiff (http://www.libtiff.org/) was compiled for BG/P@UVT and InfraGRID cluster without any specific optimizations.

The workflow dealing with satellite images (most of the time in TIFF format) using libtiff library is the following:

1. open the TIFF image in memory

TIFF* image;

if((image=TIFFOpen(name , "w")) == NULL)

{

printf("Error open the output file");

return -1;

}

2. read image header and set some global variables for later use (image coordinates, image size, image bands etc.)

TIFFGetField(image, TIFFTAG_BITSPERSAMPLE, BPS); TIFFGetField(image, TIFFTAG_SAMPLESPERPIXEL, SPP); TIFFGetField(image, TIFFTAG_ROWSPERSTRIP, RPS); TIFFGetField(image, TIFFTAG_XRESOLUTION, XR); TIFFGetField(image, TIFFTAG_YRESOLUTION, YR); TIFFGetField(image, TIFFTAG_RESOLUTIONUNIT, RuN); TIFFGetField(image,TIFFTAG_IMAGEWIDTH, width); TIFFGetField(image,TIFFTAG_IMAGELENGTH, height);

3. read image values (define a structure that can hold the entire image, read each pixel value and assign it to the tiff structure)

uint32 *raster= (uint32*) _TIFFmalloc(width*height*sizeof(uint32));

// read the entire image

if(TIFFReadRGBAImageOriented(image, width, height, raster, ORIENTATION_TOPLEFT, 1))

{

// process the raster to compute the image

}

4. when all computations are done then write the virtual image from memory on the disk and free up memory

char *buffer;

TIFF* image;

buffer=malloc(width*height*sizeof(char));

// open a new file on disk for writting

if((image=TIFFOpen(name , "w")) == NULL)

{

printf("Error open the output file");

return -1;

}

// set initial TIFF headers

TIFFSetField(image,TIFFTAG_IMAGEWIDTH, width);

TIFFSetField(image,TIFFTAG_IMAGELENGTH, height);

TIFFSetField(image, TIFFTAG_BITSPERSAMPLE, 8);

TIFFSetField(image, TIFFTAG_SAMPLESPERPIXEL, 1);

TIFFSetField(image, TIFFTAG_ROWSPERSTRIP, TIFFDefaultStripSize(image, 0));

TIFFSetField(image, TIFFTAG_PHOTOMETRIC, PHOTOMETRIC_MINISWHITE);

TIFFSetField(image, TIFFTAG_FILLORDER, FILLORDER_MSB2LSB);

TIFFSetField(image, TIFFTAG_PLANARCONFIG, PLANARCONFIG_CONTIG);

TIFFSetField(image, TIFFTAG_XRESOLUTION, XR);

TIFFSetField(image, TIFFTAG_YRESOLUTION, YR);

TIFFSetField(image, TIFFTAG_RESOLUTIONUNIT, RuN);

// For each pixel find the closest center and set the corresponding gray level in the output image

for(i=0;i<height*width;i++) {

// fill the buffer with gray level values

…

Buffer[i] = value;

…

}

// write values to the file

for(i=0;i<height;i++)

{

TIFFWriteScanline(image,&buffer[i*width], i,0);

}

TIFFClose(image);

The snippets presented are only parts of the entire program and must be used as an usage example. For more details please consult the library API.