Technology Watch



Technology watch methodology

Introduction

The task of the Technology Watch is to observe, track, filter out and assess potential technologies from a very wide field extending beyond the normal confines of the sector. A Technology Watch process can be broken down into four main phases: a needs audit, data collection, processing of the data collected and integration and dissemination of the results. The Technology Watch process must be capable of identifying any scientific or technical innovation with potential to create opportunities or avoid threats.

Objectives of technology watch

Study and follow the latest trends and advances in technology, covering both software and programming and hardware and procurement. On the one hand, new architectures and system designs are constantly released by the vendors of top systems, some more suited to particular user communities than others. On the other hand, new software technologies and paradigms, such as cloud computing and general-purpose GPU computing, emerge from research or industrial efforts. This leads to a naturally heterogeneous distributed HPC infrastructure, and WP8 will establish a permanent technology watch to cater for regional user needs. To facilitate this, the project will develop links with PRACE and with industry, and will follow advances in the research and development of HPC systems via scientific conferences and journals.

Technological areas

- Software

- Hardware

Methodology

- Organize meetings with vendors

Since the beginning of the project, several one-hour meetings with vendors have been organized and appended to the regular PSC meetings of the consortium. This practice will continue for the remainder of the project.

- Ask vendors to fill in our survey

This questionnaire is intended to help poll HPC hardware vendors to determine the most important technical trends that they sense in the HPC area. These trends are accumulated, analyzed and then synthesized into a uniform report. The polls will be repeated periodically in order to follow the most relevant novelties that might impact the near future of HPC.

Vendor survey URL: http://survey.ipb.ac.rs/index.php?sid=82412&lang=en

- Collect know-how from other projects

The public deliverables of relevant projects (DEISA, PRACE, EGI, EMI, etc.) may contain useful information for our work packages.

- Participate in the SC/ISC conferences and write digests of the new technical solutions presented there. These summaries should be organized by the technological areas defined above

- The evaluation and dissemination of the new technologies can be done by using the hp-see-tech@lists.hp-see.eu mailing list and http://hpseewiki.ipb.ac.rs/index.php/Technology_Watch wiki page

- Liaise with PRACE AISBL (Association Internationale Sans But Lucratif) and include in the related MoU the possibility of accessing the technology watch information that is generated within PRACE

Implementation plan

Involved in this work

- IICT-BAS

- IPB

- GRNET

- NIIFI (Gabor Roczei coordinates this task)

- IFIN-HH

Action plan

| ID | Task | Responsible partner | State | Deadline |
|---|---|---|---|---|
| 1 | Participate in the SC 2012 conference (10-16 November 2012, Salt Lake City, Utah) and create a technical digest | Gabor Roczei (NIIFI) | NEW | M27 (November 2012) |
| 2 | Participate in the ISC 2012 conference (17-21 June 2012, Hamburg, Germany) and create a technical digest | Dusan Vudragovic (IPB) | DONE | M22 (June 2012) |
| 3 | Participate in the PRACE WP9 Future Technologies Workshop and create a technical digest | Vladimir Slavnic (IPB) | DONE | M20 (April 2012) |
| 4 | Technology watch in this area: Tools and libraries | Branko Marovic (IPB, RCUB), Emanouil Atanassov (IICT-BAS), Mihnea Dulea (IFIN-HH) | IN PROGRESS | M35 (July 2013) |
| 5 | Technology watch in this area: New programming languages and models | Mihnea Dulea (IFIN-HH), Ioannis Liabotis (GRNET), Branko Marovic (IPB, RCUB) | IN PROGRESS | M35 (July 2013) |
| 6 | Technology watch in this area: System software, middleware and programming environments | Gabor Roczei (NIIFI), Emanouil Atanassov (IICT-BAS) | IN PROGRESS | M35 (July 2013) |
| 7 | Technology watch in this area: Green HPC (energy efficiency, cooling) | Emanouil Atanassov (IICT-BAS) | IN PROGRESS | M35 (July 2013) |
| 8 | Technology watch in this area: Processor architectures | Ioannis Liabotis (GRNET), Mihnea Dulea (IFIN-HH), Branko Marovic (IPB, RCUB) | IN PROGRESS | M35 (July 2013) |
| 9 | Technology watch in this area: Interconnect technologies | Emanouil Atanassov (IICT-BAS), Branko Marovic (IPB, RCUB) | IN PROGRESS | M35 (July 2013) |
| 10 | Technology watch in this area: Memory and storage units | Mihnea Dulea (IFIN-HH), Gabor Roczei (NIIFI) | IN PROGRESS | M35 (July 2013) |
| 11 | Participate in the 7th International Workshop on Parallel Matrix Algorithms and Applications and create a technical digest | Todor Gurov (IICT-BAS) | DONE | M22 (June 2012) |
| 12 | Finalize the MoU with PRACE, which includes access to the PRACE technology watch | Ioannis Liabotis (GRNET) | IN PROGRESS | M22 (June 2012) |
| 13 | Participate in the 11th International Symposium on Parallel and Distributed Computing - ISPDC 2012, in conjunction with MAC Summer Workshop, and create a technical digest | Todor Gurov (IICT-BAS) | DONE | M22 (June 2012) |

Time plan until the end of the project

- Plans for 2012

  - Organize meetings with vendors and ask them to fill in our survey

  - Create technical digests of the conferences attended

  - Finalize the PRACE MoU, trying to include the technology watch task

- Plans for 2013

  - Organize meetings with vendors and ask them to fill in our survey

  - Create technical digests of the conferences attended

  - D8.3 Permanent technology watch report (delivery date: M35, July 2013)

    - Responsible: Emanouil Atanassov (IICT-BAS)

Conclusions

Technology watch is a complex task that requires following several technological areas, which are described above. Anyone who participates in the SC/ISC conferences needs to write a technical digest of the new technical solutions presented there, organized by the technological areas defined above. Dissemination can be done using the hp-see-tech mailing list and the HP-SEE wiki. New HPC trends will be collected from vendors through the survey created for this purpose.

Collected materials

Other projects

- Partnership for Advanced Computing in Europe (PRACE)

  - Website: http://www.prace-ri.eu

  - The deliverable documents of PRACE can be found here

    - D2.6.1 Operational model analysis and initial specifications

    - D3.3.1 Survey of HPC education and training needs

    - Identification and categorisation of applications and initial benchmarks suite

    - D6.2.2 Final report on application requirements

    - D6.3.1 Report on available performance analysis and benchmark tools, representative Benchmark

    - D7.2 Report on systems compliant with user requirements

- Distributed European Infrastructure for Supercomputing Applications (DEISA)

  - Website: http://www.deisa.eu/

  - The deliverable documents of DEISA can be found here

    - Planning Training Activities D2-3.1

    - Planning Training Activities D2-3.2

    - Technologies D4.1

    - Applications Enabling D5.1

    - Applications Enabling D5.2

    - Environment and User Related Application Support D6.1

    - Environment and User Related Application Support D6.2

    - DEISA Benchmark Suite D7-2.1

    - DEISA Benchmark Suite D7-2.2

    - Enhancing Scalability D9.1

    - Operations D3-1.2

    - Integration of First Associate Partner D3-2.1

    - Assessment and Implementation Plan of the DEISA Development Environment D8.1

    - DEISA Common Production Environment

- European Middleware Initiative (EMI)

  - Website: http://www.eu-emi.eu/

  - Public deliverables

- European Grid Infrastructure (EGI)

  - Website: http://www.egi.eu/

  - Public deliverables

Technical digests about the visited conferences

NWS 2012

Networkshop 2012, 11th April 2012, Veszprém, Hungary

- Website: http://nws.niif.hu/nws2012

- Responsible partner: NIIFI

Technical digest:

Two vendors (SGI and IBM) gave presentations about their new developments. SGI introduced the ICE X and Prism XL, and IBM demonstrated the iDataPlex DX360 M4. Some details about these products:

SGI ICE X:

- Intel Xeon Processor E5-2600

- 5 times the processing power density

- 53 Tflops/rack

- FDR InfiniBand

- Scalable to 1000s of nodes

- Choice of cooling systems (air, cold water or even warm water)

- Fewer cables

- Two 2-socket nodes tilted face to face

- Closed loop airflow + cellular cooling

More details: http://www.sgi.com/products/servers/ice/x/

SGI Prism XL:

- GPU system

- Petaflop in a cabinet

- Supports NVIDIA and AMD GPU-based accelerators as well as Tilera's TileEncore cards

- CUDA, OpenCL, and Tilera MDE programming environments can be used

- AMD Opteron 4100 series, two per stick

More details: http://www.sgi.com/products/servers/prism_xl/

IBM iDataPlex DX360 M4:

- Water cooling door at the back of the rack

- Warm water cooling

- Up to 256GB memory per server

- Intel Xeon E5-2600 Series processors

- Hot-swap and redundant power supplies

- Two Gen-III PCIe slots plus slotless 10GbE or QDR/FDR10 InfiniBand

More details: http://www-03.ibm.com/systems/x/hardware/rack/dx360m4/index.html

7th International Workshop on Parallel Matrix Algorithms and Applications

7th International Workshop on Parallel Matrix Algorithms and Applications (PMAA 2012) 28-30 June 2012, Birkbeck University of London, UK

- Website: http://www.dcs.bbk.ac.uk/pmaa2012/

- Responsible partner: IICT-BAS

Technical digest:

The topics of this workshop are relevant to the HP-SEE project and the technology watch, not only because it was a forum for exchanging ideas, insights and experiences in different areas of parallel computing (multicore, manycore and GPU), but also because of the well-presented stream devoted to energy-aware performance metrics.

Recent years have seen a dramatic change in core technology. Voltage scaling, and with it frequency scaling, has stopped. To continue the exponential overall improvements, technology has therefore turned to multi-core chips and parallelism at all scales. With these new trends, a series of new problems arises: how to program such complex machines, and how to keep pace with the very fast increase in power requirements. In his invited presentation, Dr. Bekas (IBM) concentrated on the latter, and the focal point of his recent research was energy-aware performance metrics. It was demonstrated that traditional energy-aware performance metrics derived directly from the old Flop/sec performance metric have serious shortcomings and can give a picture that differs completely from reality. Instead, it was shown that optimizing functions of time to solution and energy at the same time gives a much clearer picture. This immediately implies a change in the way we gauge the performance of computing systems: we need to abandon the single benchmark and opt instead for a set of benchmarks that are basic kernels with widely different characteristics.
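The contrast between a Flop/sec-derived metric and a metric built on time to solution and energy can be illustrated with a small sketch. The two runs below are hypothetical numbers invented for illustration, and the product of time and energy is only one simple example of a "function of time to solution and energy"; it is an assumption, not necessarily the function proposed in the talk.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One solver run on the same problem (all numbers are hypothetical)."""
    name: str
    flops: float      # total floating-point operations executed
    seconds: float    # time to solution
    avg_watts: float  # average power draw during the run

    @property
    def joules(self) -> float:
        # Energy to solution = average power * time
        return self.avg_watts * self.seconds

    @property
    def flops_per_watt(self) -> float:
        # (flops / seconds) / watts is the same as flops per joule
        return self.flops / self.joules

    @property
    def time_energy_product(self) -> float:
        # Illustrative combined metric: lower is better
        return self.seconds * self.joules


runs = [
    # Executes many operations efficiently, but needs a long time
    Run("dense solver", flops=4.0e15, seconds=1000.0, avg_watts=2000.0),
    # Executes fewer operations and finishes sooner with less energy
    Run("iterative solver", flops=1.0e15, seconds=400.0, avg_watts=1500.0),
]

best_by_flops_per_watt = max(runs, key=lambda r: r.flops_per_watt).name
best_by_time_energy = min(runs, key=lambda r: r.time_energy_product).name

print("Best by Flops/W:", best_by_flops_per_watt)
print("Best by time x energy:", best_by_time_energy)
```

In this made-up case the Flop/sec-derived metric favors the run that takes longer and consumes more energy to reach the same solution, while the combined metric favors the run that is both faster and cheaper in energy.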

The 11th International Symposium on Parallel and Distributed Computing

The 11th International Symposium on Parallel and Distributed Computing - ISPDC 2012, in conjunction with MAC Summer Workshop 2012, June 25-29, 2012, Leibniz Supercomputing Centre, Munich, Germany

- Website: http://ispdc.cie.bv.tum.de/

- Responsible partner: IICT-BAS

Technical digest:

The topics of the workshop were highly relevant to the HP-SEE project and the technology watch, encompassing hardware, middleware and application software developments. The most important presentations on the deployment of advanced hardware in European HPC centers were given by Arndt Bode, chairman of the Board of Directors of Leibniz Supercomputing Centre (LRZ) of the Bavarian Academy of Sciences and Humanities, Germany, “Energy efficient supercomputing with SuperMUC”, and by Wolfgang E. Nagel, Director of the Center for Information Services and High Performance Computing (ZIH), Dresden, Germany, “Petascale-Computing: What have we learned, and why do we have to do something?”

We point out that the plans for the expansion of the Bulgarian supercomputer center also include the procurement of a Blue Gene/Q system in the future. Many talks presented new advanced methods of software development for the new hardware architectures. For example, the talk by David I. Ketcheson, Assistant Professor, Applied Mathematics, King Abdullah University of Science and Technology, Saudi Arabia, “PyClaw: making a legacy code accessible and parallel with Python”, presented a general hyperbolic PDE solver that is easy to operate yet achieves efficiency near that of hand-coded Fortran and scales to the largest supercomputers, using Python for most of the code while employing automatically wrapped Fortran kernels for computationally intensive routines. Several talks presented other developments regarding the use of Python in HPC environments.

Another area of active development is the use of accelerators, and more specifically GPUs. Many such talks were presented. We highlight the talk by Rio Yokota, Extreme Computing Research Group, KAUST Supercomputing Lab, King Abdullah University of Science and Technology, Saudi Arabia, on Petascale Fast Multipole Methods on GPUs.

Issues and problems in operating a distributed HPC infrastructure in Europe, and ways to approach their resolution, were presented by Achim Streit, Director of Steinbuch Centre for Computing (SCC), Karlsruhe Institute of Technology (KIT), Germany, in his talk “Distributed Computing in Germany and Europe and its Future”, where he emphasized the suitability of the UNICORE middleware.

Several talks presented approaches that combine HPC and Cloud usage. A MATLAB representative demonstrated the ease of using cloud resources from the MATLAB prompt. Another interesting cloud platform was presented by Miriam Schmidberger and Markus Schmidberger in “Software Engineering as a Service for HPC”: a prototype platform was shown, and a call was opened for testing it and for gathering user and operator requirements, so the HP-SEE project could consider this option.

PRACE 1IP WP9 Workshop

PRACE 1IP Work Package 9 Future Technologies Workshop

- Website: https://eventbooking.stfc.ac.uk/news-events/prace--1ip-work-package-9-future-technologies-workshop

- Responsible partner: IPB

Technical digest:

The workshop held in Daresbury, UK, included presentations from PRACE (http://www.prace-ri.eu/) members on the latest progress on work in Work Package 9 tasks, and presentations from external speakers on technology developments key to the delivery of HPC systems in 2014 and beyond.

Software

Tools and libraries

- The talk on advanced debugging techniques, “Debugging for task-based programming models”, covered new concepts in debugging parallel, task-based applications with the Temanejo tool. More information about Temanejo can be found at http://temanejo.wordpress.com/

- The presentation on enabling MPI communication between GPU devices using MVAPICH2-GPU summarized the CSCS WP 9.2.C efforts, which included deployment and evaluation of MVAPICH2-GPU on the CSCS iDataPlex cluster, evaluation of InfiniBand routing schemes on the 2-D partition of the iDataPlex cluster, integration of rCUDA (remote CUDA GPU virtualization interface) with the SLURM resource management system, and JSC I/O prototype evaluations. More about MVAPICH2-GPU can be read at http://mvapich.cse.ohio-state.edu/performance/gpu.shtml

New programming languages and models

- "Programming heterogeneous systems with OpenACC directives" gave an example of parallelizing OpenMP code with OpenACC, compared performance results between OpenMP and OpenACC, and pointed out how much impact data locality can have on performance. More information about OpenACC can be found at http://www.openacc-standard.org/

- The talk titled "Experiments with porting to UPC" introduced Unified Parallel C (UPC) and presented experiences with porting applications to UPC, along with the results and observations from the experiments carried out. More about UPC compilers and ported software can be found at the following URLs:

  - Compilers:

    - Berkeley UPC (http://upc.lbl.gov/)

    - gcc-upc (Intrepid, http://www.gccupc.org)

    - UPC HP (http://h30097.www3.hp.com/upc/)

  - Ported software:

    - Hydro

    - Graph500 (http://www.graph500.org/)

System software, middleware and programming environments

- Rogue Wave presented a talk titled “Productivity and Performance in HPC”, describing their new performance analysis and optimization tool, ThreadSpotter, which automatically finds possible optimizations and offers context-driven guidance on how to perform them. More information about ThreadSpotter can be found at http://www.roguewave.com/products/threadspotter.aspx

- In the talk about scheduling for heterogeneous resource systems, Can Özturan (Bogazici University, Turkey) presented a simulator for testing scheduling algorithms, an integer programming (IP) formulation of the scheduling problem with an implementation as an IP-based SLURM scheduling plugin, and tests run with the SLURM simulation. The code for the SLURM plugin is available at: http://code.google.com/p/slurm-ipsched/

- The talk titled “System software components for production quality large-scale hybrid cluster for industry and academia” presented a set of system software components, among them HNagios, a customized version of Nagios for HPC; GPFS, a parallel file system, which was compared with Lustre; and PBSPro, a resource manager, with a focus on GPUs.

- The presentation from TU Dresden on challenges for performance support environments covered the definition of performance and the key terms that define it, and the challenges that software tools face, with an overview of challenges specific to monitoring, storage and presentation tools. It demonstrated how Vampir (http://www.vampir.eu/) handles such challenges and how it compares or integrates with other tools such as TAU (http://tau.uoregon.edu/) and Scalasca (http://www.scalasca.org/).

Hardware

Green HPC (energy efficiency, cooling)

- JKU presented an experimental evaluation of an FPGA-accelerated prototype, comparing stream and dataflow architectures and assessing energy efficiency in comparison with multicores.

- The LRZ presentation “Measuring Energy-to-Solution on CoolMUC, a hot water cooled MPP Cluster” assessed the use of warm water cooling and its possible benefits.

- BSC gave a report on the Energy2Solution prototype, showing current progress on an ARM+GPU prototype.

- The PSNC talk on cooling and energy explained why cooling matters by showing the yearly costs and the proportion between energy input and generated heat, gave an overview of various cooling methods, and suggested using air for isolation rather than cooling.

- The PSNC presentation titled Electricity in HPC Data centre gave general information about electrical power distribution, along with the results of a survey of 15 HPC data centres across Europe, presented running costs, and provided some recommendations:

  - Secure at least two independent main supplies

  - Use MV power lines for small and medium-sized data centres and HV (110-137 kV) power lines for larger ones

  - Assume modular design

  - Always allow for future expansion (20 to 25 percent spare capacity)

  - Use low-loss equipment

  - Use distributed UPS (ultracapacitors) and an ATS switch instead of static UPS

  - Use UPS + diesel backup and “N+1” redundancy only for “critical systems”

  - Maintenance is key to safe and smooth HPC data centre operation (it is important to have a scheduled and detailed maintenance and operational plan, as well as a technical condition assessment at least once per year)

  - Importance of safety (providing comprehensive training for the staff)

  - Always try to negotiate the energy price

Processor architectures

- The evaluation of a hybrid CPU/GPU cluster by CaSTorC presented results from evaluating a prototype GPU cluster, including an investigation of programming models, paradigms and techniques for multi-GPGPU programming, new developments in the interconnection of GPUs, and an evaluation of power efficiency in terms of the energy required to solve a given problem.

- The PSNC presentation on the evaluation of AMD GPUs assessed the new AMD Accelerated Processing Units (APUs), which combine x86 cores and a GPU on a single chip, and concluded that zero-copy worked very well for tightly coupled GPU-CPU code.

Interconnect technologies

- The talk about evolution and perspective of topology based interconnect, from Eurotech (http://www.eurotech.com/), gave an overview of copper and optical interconnect technology, followed by coverage of interconnect topologies focusing on fat tree and 3D-torus. The rest of the talk covered 3D-torus in FPGA, IB-based 3D torus and next gen FPGA-based 3D torus.

- The presentation from CEA and CINES, titled Exascale I/O prototype evaluation results, showed the results of benchmarking various storage systems and presented findings about the Xyratex RAID engine and embedded server I/O model. More information about Xyratex can be found at http://www.xyratex.com/

Memory and storage units

- The presentation by Aad van der Steen, titled "An (incomplete) survey of future memory technologies", surveyed a number of memory technologies that might help ease the speed mismatch between CPU and memory while offering better cost, durability, reliability, power consumption and size, and being nonvolatile. The survey covered P(C)RAM, MRAM, memristors (RRAM), racetrack memory and graphene memory.

Miscellaneous

- Intel’s presentation on overcoming the barriers to exascale through innovation gave an overview of the main obstacles on the way to exascale and insight into some of Intel’s contributions towards overcoming them. More about Intel European Labs can be found at: http://www.exascale-labs.eu

- The talk by Jeffrey Vetter from Oak Ridge and Georgia Tech, titled Scalable heterogeneous computing & NVRAM, pointed out how extreme the projections for exascale architectures are in terms of scalability to 1 billion threads, the move to diverse, heterogeneous architectures, and memory capacity and bandwidth. GPUs and NVRAM were covered as technologies that may offer a solution. Contributions to the project described in this talk came from the following entities:

- DOE Vancouver Project (software stack for productive programming on scalable heterogeneous architectures) https://ft.ornl.gov/trac/vancouver

- DOE Blackcomb Project: https://ft.ornl.gov/trac/blackcomb

- DOE ExMatEx Codesign Center: http://codesign.lanl.gov/

- DOE Cesar Codesign Center: http://cesar.mcs.anl.gov/

- DOE Exascale Efforts: http://science.energy.gov/ascr/research/computer-science/

- Scalable Heterogeneous Computing Benchmark team: http://bit.ly/shocmarx

- International Exascale Software Project: http://www.exascale.org/iesp/Main_Page

- SHOC: http://ft.ornl.gov/doku/shoc/start

- ASCR Workshops and Conferences, Scientific Grand Challenges Workshop Series: http://science.energy.gov/ascr/news-and-resources/workshops-and-conferences/grand-challenges/

SC 2012

The International Conference for High Performance Computing 2012, 10-16 November 2012, Salt Lake City, Utah

- Website: http://sc12.supercomputing.org

- Responsible partner: NIIFI

Technical digest:

ISC 2012

International Supercomputing Conference 2012, 17-21 June 2012, Hamburg, Germany

- Website: http://www.isc-events.com/isc12

- Responsible partner: IPB

Technical digest:

Good overview of new technologies by HPC vendors: http://lecture2go.uni-hamburg.de/konferenzen/-/k/13747

RSS feeds

HPC journals

HPC wire


insideHPC

European projects

EMI


EGI


PRACE


HPC vendors

- IBM: http://www-03.ibm.com/systems/technicalcomputing/

- HP: http://h20311.www2.hp.com/hpc/us/en/hpc-index.html

- Dell: http://content.dell.com/us/en/enterprise/hpcc

- NEC: http://www.nec.com/en/de/en/prod/solutions/hpc-solutions/index.html

- SGI: http://www.sgi.com/products/servers

- Hitachi: http://www.hitachi.co.jp/Prod/comp/hpc/

- Fujitsu: http://www.fujitsu.com/global/services/solutions/tc/

- Cray Inc.: http://www.cray.com/

- Intel: http://www.intel.com/content/www/us/en/high-performance-computing/server-reliability.html

- Oracle: http://www.oracle.com/

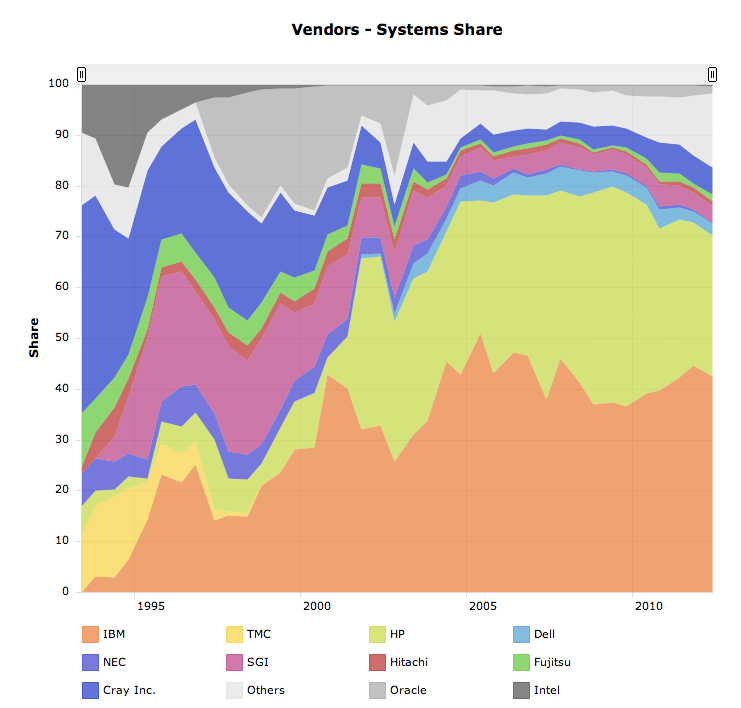

Source: http://i.top500.org/overtime

Vendor responses about technical trends

Source: http://survey.ipb.ac.rs/index.php?sid=82412&lang=en

HPC architecture (commodity clusters, custom-based solutions (mainframes))

We are sensing that the difference between the classic mainframe based resources (SMPs, NUMA systems) and the PC-based clusters has been dissolving as technological elements are mutually transferred between the two.

- How do you see this trend?

- Vendor 1: Yes, agree. However, this trend will reach its limit in future.

- Do you think that the clustered environments will replace the classic mainframe models?

- Vendor 1: PC-based clusters will not be able to replace classic mainframe resources at least for the next 5 years. Both will be always needed for certain environments.

- Or do you think that the classic mainframe architectures will survive within the multi-core server architectures?

- Vendor 1: Yes, classic mainframe architectures will survive within the multi-core server architectures because mainframe resources also implement such technologies.

Processor architectures

The average number of cores in nodes has been increasing.

- Do you think that this trend will continue in the future?

- Vendor 1: Yes, definitely.

- What do you think to be the largest core number in a single server in a year?

- Vendor 1: 96

- Do you think that the increasing number of cores will not contribute to the performance increase sufficiently?

- Vendor 1: Increasing number of cores will contribute to the performance increase sufficiently.

- Do you think that AMD and Intel will dominate the HPC market?

- Vendor 1: Yes.

- Do you think that the graphics processors will keep representing an increasing share in the CPU portfolio?

- Vendor 1: Yes, but this increase will go slower.

Interconnect technologies

- What do you think to be the most dominant interconnection platform in HPC?

- Vendor 1: Infiniband

Operating system

HPC is traditionally performed in Linux/Unix environments. Nevertheless Microsoft keeps pushing in this area as well.

- Do you think that the amount of MS Windows operated centers will increase in the future?

- Vendor 1: Not much.

- Do you think that Linux will outperform other Unix solutions in this field?

- Vendor 1: Yes.

Virtualization

IaaS clouds are used for decoupling operating systems from the physical hardware.

- Do you think that such virtualization technologies will show up in HPC to use the hardware platforms more efficiently?

- Vendor 1: Yes, they will.

Energy efficiency

While 10 years ago the power needed to run a site was only a marginal question, today it is one of the most important cost factors alongside the initial cost. Water cooling helps save 10-30% of the energy spent on the operating environment, so different forms of water cooling (racks, server rooms, cooling towers) have begun to appear. Other forms of energy saving are also supported, such as automatic standby operation and power limits on CPUs.

- Do you think that water cooling will gain significance in the future data centers?

- Vendor 1: Yes, but price will be the driving component in determining to what extent water cooling will gain significance.
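To put the 10-30% figure quoted in the survey question into perspective, the range of savings can be computed for a given site. This is a minimal sketch: the function name `cooling_savings_kwh` and the annual consumption of 1,000,000 kWh are hypothetical values chosen for illustration and are not survey data.

```python
def cooling_savings_kwh(environment_kwh: float,
                        low: float = 0.10,
                        high: float = 0.30) -> tuple[float, float]:
    """Return the (minimum, maximum) yearly energy saved by water cooling.

    environment_kwh: yearly energy spent on the operating environment
                     (cooling and related infrastructure), in kWh.
    low, high:       the 10-30% savings range quoted in the survey text.
    """
    if environment_kwh < 0:
        raise ValueError("energy consumption cannot be negative")
    return environment_kwh * low, environment_kwh * high


# Hypothetical site spending 1,000,000 kWh per year on its operating environment
low_kwh, high_kwh = cooling_savings_kwh(1_000_000.0)
print(f"Estimated savings: {low_kwh:,.0f} to {high_kwh:,.0f} kWh per year")
```

Multiplying the result by the local energy price gives the yearly cost range against which the additional investment in water cooling can be weighed, which is the trade-off the vendor response refers to.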

Useful links

- http://www.deisa.eu/news_press/symposium/Amsterdam2009/presentations/pr_de_talk_vdsteen.pdf

- http://www.prace-ri.eu/IMG/pdf/Huber-PRACE-1IP-Industrial-Seminar-2011-03-28.pdf

- http://www.prace-ri.eu/IMG/pdf/D8-3-2-extended.pdf

- http://www.prace-ri.eu/IMG/pdf/13-Presentation_WP8_PRACE_IndustrySeminar.pdf

- http://innopedia.wikidot.com/technology-watch

- http://www.prace-ri.eu/Organisation

- http://www.prace-ri.eu/Members

- http://www.hpcwire.com/

- http://insidehpc.com/

- http://green500.org/

- http://top500.org/

- http://www.isc-events.com/